This guide is closer to book length than article length (29,000+ words). Why so long? Because I wanted to cover everything you need to know about page speed optimization when working with a WordPress site. And, there is a LOT to know. My goal is that this one long guide will be all you will need. So, you may want to bookmark this and read it in chunks. How did I do? Let me know in the comments.

Introduction

You don’t want visitors to your site to become frustrated because it takes too long to load. You also want to please Google and the other search engines; having a fast, responsive, and optimized WordPress site can help in that regard. There are many tools that can help, and I will discuss many of them. Special emphasis will be given to Google’s PageSpeed Insights (PSI).

When I switched this site to WordPress I had to learn a lot about the platform. I also had to choose a theme, find useful plugins, and learn how to optimize WordPress for speed and performance. Years ago I tried to optimize my site with W3TC (W3 Total Cache); it helped, but I never got stellar results. That is because I focused on only one tool without understanding all the factors involved in optimizing a WordPress site. As I learned more about how WordPress works, I decided that scoring a perfect 100 on Google PageSpeed Insights would be an interesting challenge. Well, I did it and now I want to share what I learned with you.

If you are reading this, you have probably heard about and maybe already used Google’s PageSpeed Insights tool. If so, you are probably frustrated by the warnings it gives and are looking for ways to get rid of them and increase your score.

Regardless of your knowledge level, this guide will help you understand what’s involved in optimizing a WordPress site’s speed and performance. I will also show you ways to improve your site enough to ace Google’s demanding test.


What is the Goal?

Before starting, I think we should stop and ask ourselves a fundamental question: what exactly are we trying to accomplish? Is the goal simply to please Google and hopefully reap SEO rewards for doing so? Do you have a personal mission to score a perfect PageSpeed Insights result at all costs? Is the goal to offer the very best user experience possible? It is likely a combination of all these things. A great PageSpeed Insights score should do the job, but sometimes we must pit performance against usability and functionality. Knowing where and how to draw the line for these trade-offs will depend on your (and your audience’s) preferences.

Can You Really Score 100 on Google’s PageSpeed Insights Test?

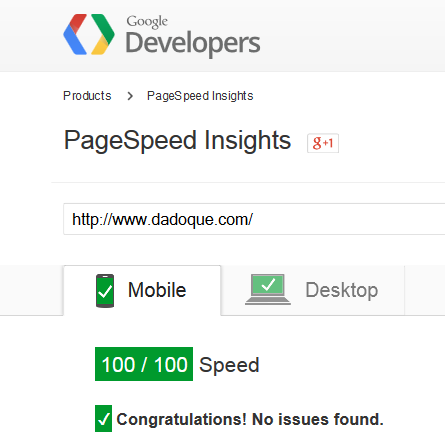

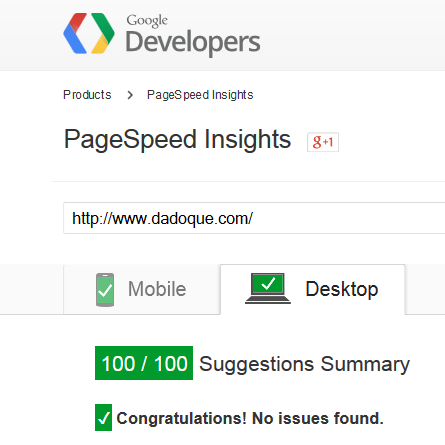

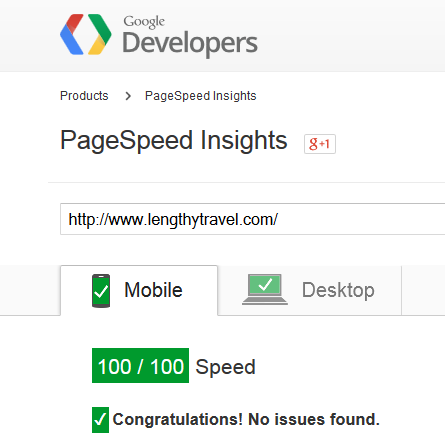

If you read articles on the topic, you will notice that few people have actually managed a perfect PageSpeed Insights score. Some even claim it is impossible. Well, it is possible, and I did it with three sites.

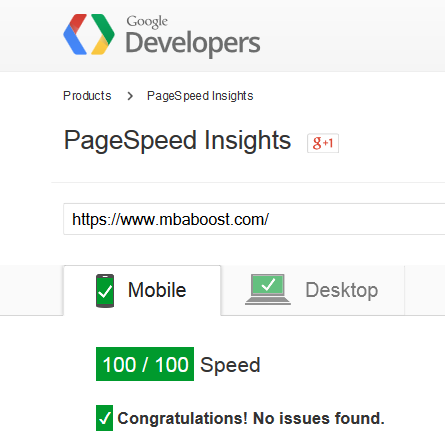

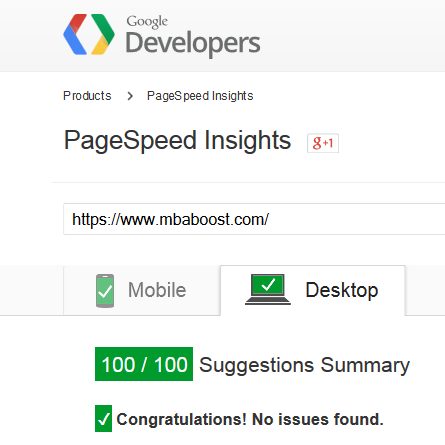

Of course, you should be skeptical of my claims, so here are a few screenshots (from an older version of PSI) by way of proof. My Dado Que website:

My MBA Boost website:

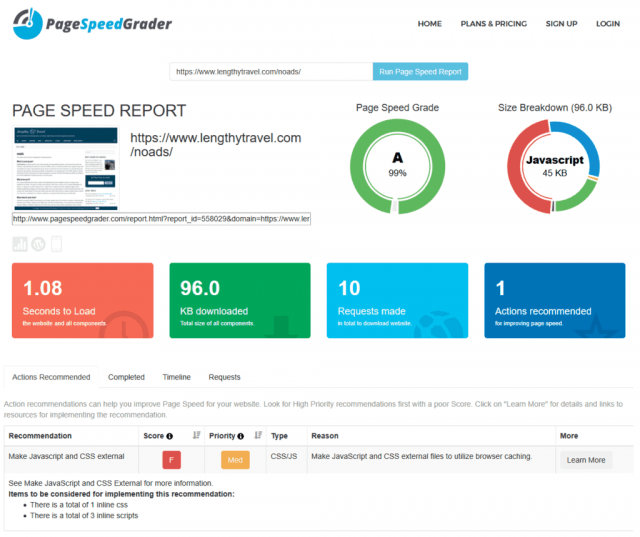

This Lengthy Travel website:

I achieved the perfect scores without any special server setup or technical wizardry. WordPress-optimized hosting companies do exist but I was not using one when I first wrote this guide.

A Content Delivery Network (CDN) can be very helpful and I use a free one on this site but not on the other two sites. Speaking of free, all of the optimization changes I use are completely free.

Finally, lest you think I started with a high score and didn’t have far to go, that is not true. My initial PageSpeed Insights scores ranged from 58-59 for mobile and 54-74 for desktop.

Beware What You Read Online

This is probably not the first article on the topic you have read. While reading about the experiences and tips of others is a good thing, I advise you to be wary of what you read. Here’s why.

First, each site is unique, so it is quite hard to get an apples-to-apples comparison or prescription. Think a plugin sounds great? You will find people who rave about it and people who claim it killed their site.

Second, many articles fail to give sufficient details about how and what they tested. Take load time as an example. Which test did they use and from what geographical locations? In other words, where are the host server and the testing server located? Did they list an averaged time or a single (best) measure? What plugins or other tools did they use? What day of the week and what time of the day? Which page did they test and what content did they include or exclude? If they used a plugin, what settings did they use? There aren’t right or wrong answers to these questions. But, without knowing them you are not getting a complete picture and it is difficult to compare to the specific situation you are facing or replicate the suggestions offered.

Third, what results are they showing? I recently read a well-written account of someone’s PageSpeed Insights efforts that only showed the desktop result. In the comments someone asked about it and learned that mobile actually scored quite poorly.

Finally, what tools and techniques did they test? There are many to choose from and it is almost impossible to test them all. It is unwise to say a plugin is the best solution if you haven’t compared it to alternatives. Yet, many folks do exactly that.

None of the above is meant to imply that you shouldn’t read other articles about optimization. They are often instructive and, while not every tool is tested and compared, after enough reading you will have a good idea of which tools to try.

Testing Tools

“Certain people fall prey to the idea that pages with HIGH Page Speed scores should be faster than pages with LOW scores. There are probably various causes to this misconception, from the name—to how certain individuals or companies sell products or services.” – Catchpoint Systems

Besides knowing which optimization tools to test, we need to know which tests to use. This guide is focused on Google’s PageSpeed Insights test, which may be the main reason you are reading this. But other useful tests exist with different features and benefits, and I will list my favorites.

Different Types of Tests

Testing your WordPress site involves four main types of tests.

First, speed benchmarking services test the actual speed or loading time of your site. Sometimes you can specify the geographical location and sometimes get averages over various attempts and distances. Because of these differences, results will often vary—sometimes significantly—when using multiple tests.

The second type of test provides a grade score (either numeric or alphabetical). These tests generally measure the quality and/or efficiency of the design and coding of your site. This is important because there is a correlation between that score and the actual speed of your site. Still, having a better grade than another site does not guarantee that your site is actually faster and the discrepancy can often be quite large. So, while grading tools are useful for giving you an idea of what you can do to get lower loading times, by themselves they are rarely sufficient to tell you about actual speed performance.

Of course, some tests cover both speed and performance. Helpful features to look for include: number of requests and page size; archived results (so you can go back and look at older results as a baseline); a waterfall showing how long each individual request on the page took; a display of file sizes, load times and other details about every element of a page; the ability to assess the performance of your website from different locations in the world; resource diagrams; a breakdown of your CSS and JS usage (inline vs. external, size); and a measure of how your site compares with the countless others previously tested.

The third type of test, a load test, attempts to evaluate how your site will respond under heavy use. It typically does this by having one or more computers in one or more locations emulate multiple users trying to use your site over limited periods of time. For these tests, the quality and nature of your hosting provider will often be more important than your specific WordPress site configuration. Load testing services are usually not free. They are important but, unfortunately, beyond the scope of this article. Still, if you are interested in using them, some popular options include blitz.io, loader.io, Loadstorm, Siege and ApacheBench (ab).

Finally, there are specialty tests that focus on specific elements that affect performance and there are tools you can use from your computer or browser. I will list useful specialty tests after I list the popular primary tests.

PageSpeed Insights and Other Google Tests

PageSpeed Insights (PSI)

PSI is a test that you cannot ignore and its results are often an eye opener. PSI results also offer a great starting point if you are just beginning to optimize your site. It is also the only test I am aware of that provides separate mobile and desktop performance scores and tips. This is especially useful because functionality and usability are often different for the two and therefore so is—potentially—performance.

In 2018 Google made big changes to PageSpeed Insights. Specifically, it switched to using Lighthouse for its lab data. Lighthouse simulates a page load on mobile networks with mid-tier devices. Thus, its results can differ significantly from older versions of PSI. In addition to the performance results that PSI always provided, Lighthouse adds tests for accessibility, progressive web apps, SEO, and best practices.

Google’s Measure tool

PageSpeed Insights only shows Lighthouse’s performance assessment of your site. You can, however, use Google’s Measure tool to see the rest of the Lighthouse assessments.

Chrome User Experience Report (CrUX)

While lab data is useful, PageSpeed Insights also integrates field data from the Chrome User Experience Report (CrUX). CrUX is based on a set of historical stats about how a specific page has performed in the real world. It uses anonymized performance data from real users who visited the page from different devices and network conditions. These CrUX results are usually only available for popular websites, however.

Lighthouse in Chrome

Lighthouse powers PageSpeed Insights, but you can also run it directly from the Chrome browser. Click the three dots at the top right, choose the “More tools” option and then “Developer tools.” Next, click on the “Audits” option at the top and you’ll see the Lighthouse logo with a “Generate report” button. Alternatively, use the Lighthouse Chrome Extension.

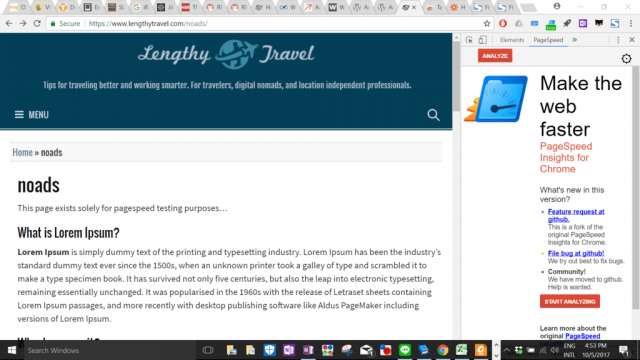

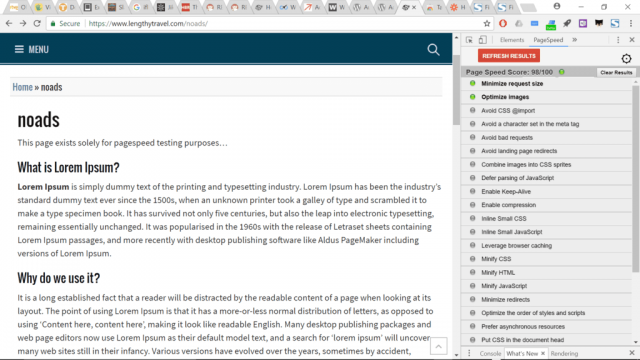

PageSpeed Insights Chrome Extension

The Google PageSpeed Insights Extension for Chrome is great for several reasons. First, you can use it to test a development site, especially one not accessible on the Internet (check out my tips for creating a development site with XAMPP). Second, you don’t have to visit the PSI website, which can be slow and requires 30 seconds between tests. It also makes testing many pages much easier than doing so via the website. Finally, it produces a nice layout of results with detailed breakdowns of all the issues covered.

Google Mobile-Friendly Test

Mobile-Friendly Test analyzes exactly how mobile-friendly your site is, and focuses on elements beyond speed as well. Google’s Search Console also offers a mobile usability report.

Other Popular Page Speed Tests

I think most of us tend to work through a progression on the page speed optimization learning curve. The best way to do that is to get into the result details provided by the alternatives to PageSpeed Insights. Naturally, there is a lot of overlap but each of the tools below provides at least one test or feature that distinguishes it from the rest. If you are a real page speed warrior, maximizing your scores for all of the tests will test your mettle.

GTmetrix

The big selling point for GTmetrix is that it performs two tests: page speed and YSlow. YSlow almost always gives a worse performance score because it tests some things most other tests don’t, especially the use of a CDN. You cannot specify a geographical region, but it will report what region (and browser) it used. I also like the presentation of results for this test site, giving each individual result a score out of 100. Results also get color-coded and sorted to easily spot the biggest problem areas. Click any recommendation and you will get more details and a link to an article explaining the test.

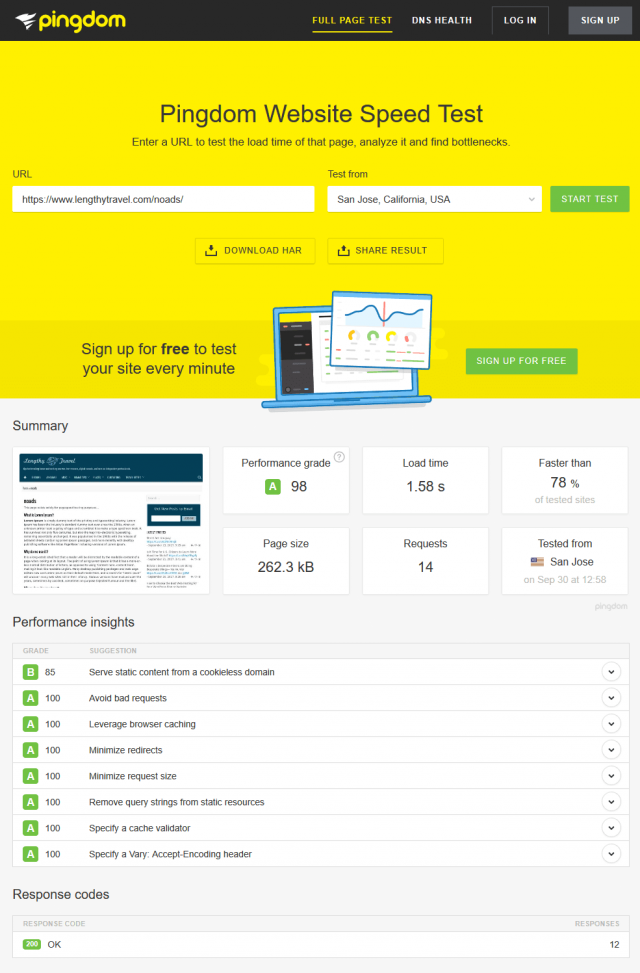

Pingdom

Pingdom is very popular and lets you test from three different geographic locations. It also seems to generate warnings that other tests don’t. Its one-page display of results is nice; each test result gets a color, letter grade and score out of 100. Finally, it tells you what percentage of tested sites yours is faster than. One thing I don’t like about it is the lack of detail on any individual warning.

WebPageTest

WebPageTest is another good testing service. One thing that sets it apart is that it tests your site three times instead of just once. You can also choose the geographical location, browser type and even the connection type/speed. Basic results are given a letter grade for six categories, including use of a CDN. Detailed results are also available, but you have to click through to see those on separate pages. FYI, Moz has a useful guide to Web performance using WebPageTest.

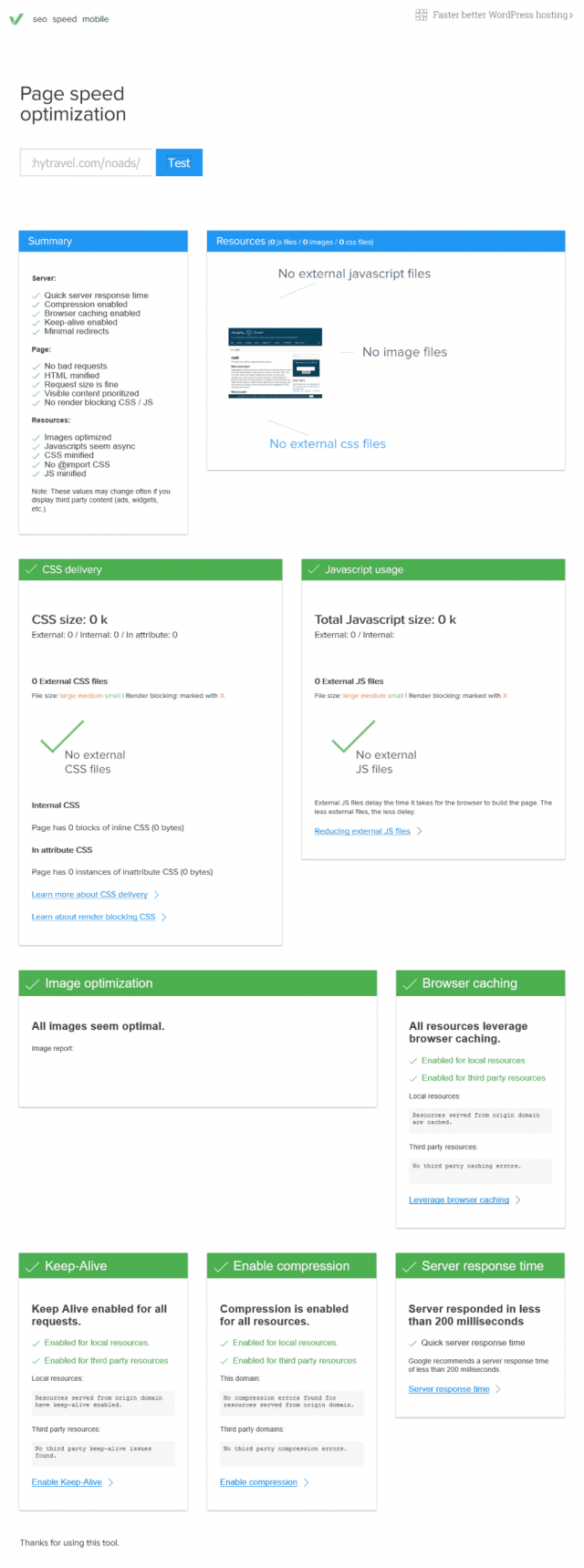

Varvy

Varvy offers two testing tools: Page speed optimization and SEO (which also includes a page speed component). I don’t think the page speed test actually provides anything of value that the other tests don’t, but the SEO test (which is the test available on the home page) is good for testing compliance with a lot of Google guidelines (some speed related, some SEO). The site generally provides excellent articles explaining each test.

More Page Speed Test Services

The online testing services I listed above are the most popular and the ones I primarily use. There are others, however, that might be worth considering. Here are the ones I have read good things about but have not used enough to recommend for or against.

DareBoost

In older comments below, Damien from DareBoost recommended his tool. He claims it offers some checks other tools don’t, including a speed index, checking for duplicate scripts, checking performance best practices in your jQuery code, performance timings (not sure what he means by that), SEO, compliance, security, etc. You can run five tests without registering, though registration is required for some features. You also have to share via social media to download a PDF of the generated report. Personally, I don’t find that the test adds much value in terms of speed issues, but its very extensive list of specific SEO, security, accessibility and quality issues definitely makes it worth adding to your suite of testing tools.

GeekFlare Website Performance Audit

GeekFlare’s Website Performance Audit produces results similar to PageSpeed Insights. They may even be reproducing PSI (Lighthouse) results directly, though I did recognize some tests that seem to be independent. Regardless, they cover more than just speed measurements and the presentation of results is quite good.

PageSpeedGrader

PageSpeedGrader is a pretty simple test that shows you an overall grade out of 100. The core results page shows only the recommendations for improvement; separate tabs cover successful tests, a timeline, and the requests made to load the page.

PageSpeed Insights Monitoring

Most of the page speed testing sites are free but also offer paid plans to regularly monitor your site. I’m a bit cheap for that so I wondered if there is some way to do my own regular testing. Read about the three different automated PageSpeed Insights testing solutions I found and/or created.
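If you would rather script this yourself, PageSpeed Insights also has a public API (v5). The Python below is a minimal sketch, separate from the solutions linked above, assuming the `runPagespeed` endpoint and two response fields: `lighthouseResult.categories.performance.score` (the lab score, from 0 to 1) and `loadingExperience.overall_category` (CrUX field data, which is missing for pages without enough traffic). The function names, CSV layout and optional API key handling are my own illustrative choices, so check Google's API reference before relying on the field names.

```python
import csv
import json
import time
import urllib.parse
import urllib.request

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, strategy="mobile", api_key=None):
    """Return the API request URL for one page and strategy ("mobile" or "desktop")."""
    params = {"url": page_url, "strategy": strategy}
    if api_key:  # assumption: a key is optional for light use but raises your quota
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urllib.parse.urlencode(params)


def summarize_psi(data):
    """Pull the Lighthouse (lab) score and the CrUX (field) category out of a response."""
    score = (
        data.get("lighthouseResult", {})
        .get("categories", {})
        .get("performance", {})
        .get("score")
    )
    # overall_category is absent when CrUX has no field data for the page
    field = data.get("loadingExperience", {}).get("overall_category")
    return {
        "lab_score": None if score is None else round(score * 100),
        "field_category": field,
    }


def run_psi(page_url, strategy="mobile", api_key=None, timeout=120):
    """Call the API once and return the summary dict."""
    url = build_psi_url(page_url, strategy, api_key)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return summarize_psi(json.load(resp))


def log_scores(pages, csv_path="psi-log.csv", api_key=None):
    """Append one row per page and strategy so results can be compared over time."""
    with open(csv_path, "a", newline="") as fh:
        writer = csv.writer(fh)
        for page in pages:
            for strategy in ("mobile", "desktop"):
                summary = run_psi(page, strategy, api_key)
                writer.writerow([
                    time.strftime("%Y-%m-%d %H:%M"), page, strategy,
                    summary["lab_score"], summary["field_category"],
                ])
```

Running something like `log_scores(["https://example.com/"])` from a daily cron job would build up a simple score history. Each API call can take a while, and the scores themselves vary from run to run, so look at trends rather than single rows.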

Server Response Codes and Headers

When you visit a website, you see the content on the page, but your browser also sees extra information in HTTP header fields. Your server sets most of these, but you can sometimes add others via an .htaccess file or with PHP code. That, however, gets into some pretty advanced territory.

Generally, I wouldn’t worry much about changing your site’s headers, but it is instructive to know what they are. Useful tools for checking them include REDbot, HTTP Status Code Checker, and HTTP / HTTPS Header Check, with REDbot being the most useful. It will point out common problems and suggest improvements. Although it is not an HTTP conformance tester, it can find a number of HTTP-related issues. REDbot interacts with the resource at the provided URL to check for a large number of common HTTP problems, including: invalid syntax in headers, ill-formed messages (e.g., bad chunking, incorrect content-length), incorrect GZIP encoding, and missing headers. Additionally, it will tell you how well your resource supports a number of HTTP features.
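For a quick look at the few headers that matter most for speed, a short Python script is enough. This is a sketch with hypothetical helper names (it is not how REDbot works): it fetches a URL while advertising gzip and Brotli support, then flags a missing `Content-Encoding`, a missing or disabled `Cache-Control`, and the absence of both `Last-Modified` and `ETag`. Treat the warnings as prompts rather than verdicts, since short or no caching on HTML pages is often deliberate, while static CSS, JS and images usually benefit from long cache lifetimes.

```python
import urllib.request


def fetch_headers(url, timeout=30):
    """GET the URL (advertising gzip/Brotli support) and return its headers, lower-cased."""
    req = urllib.request.Request(
        url, headers={"Accept-Encoding": "gzip, br", "User-Agent": "header-audit-sketch"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return {name.lower(): value for name, value in resp.headers.items()}


def audit_headers(headers):
    """Return warnings about a few speed-related headers (expects lower-case keys)."""
    warnings = []
    if not headers.get("content-encoding"):
        warnings.append("Response not compressed (no Content-Encoding header)")
    cache = headers.get("cache-control", "")
    if not cache:
        warnings.append("No Cache-Control header")
    elif "no-store" in cache or "max-age=0" in cache:
        warnings.append(f"Caching disabled or immediate expiry: {cache}")
    if "last-modified" not in headers and "etag" not in headers:
        warnings.append("Neither Last-Modified nor ETag set, so no conditional requests")
    return warnings
```

For example, `audit_headers(fetch_headers("https://example.com/wp-content/themes/child/style.css"))` (a hypothetical path) would list any warnings for one stylesheet.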

Other Specialized Tools and Tests

Some other specialized tools can help as you slog your way through your efforts, including:

- Page Weight is a free tool provided by ImgIX to drive sales of its online cloud image optimization service. The results for my limited testing were impressive. All you do is paste a URL into the site and it will analyze the overall weight of the page and what percentage is due to images. If you can effectively compress any of those images, it will indicate that. You can even download a compressed copy of the worst offender.

- The WAVE Web Accessibility Tool will tell you how well people with physical impairments can use your site.

- MX Toolbox tests lots of different things, including DNS and mail server setup, presence on spam blacklists, etc.

- GiftOfSpeed, Check GZIP Compression, GIDZipTest, and REDbot all let you check if you have gzip compression working. REDbot also checks browser caching.

- Geekflare has an awesome collection of free tools you can use to test and troubleshoot things on your website. For example, the TTFB tool is simple, quick, and lets you see how fast (low) your time to first byte is from three locations around the globe.

- last-modified.com tests to see if your server properly handles the If-Modified-Since header.

- KeyCDN’s Brotli Test page checks if your site has Brotli compression enabled.

- Learn to love Chrome’s Developer Tools. I already mentioned how useful it is for finding potential JavaScript problems. The Inspect Element feature (which is also available in Firefox) is great for working on CSS issues. There is also a Network section that will show you where your site is using time/resources. This can help point to possible ways you can improve. And, if you use the recommended PageSpeed Insights or Lighthouse extensions, you accessed them from a Developer Tools menu.

- The HTTP/2 and SPDY indicator extension for Chrome shows a lightning icon in the address bar. It’s blue if the page supports HTTP/2, green if the page supports SPDY, and gray if neither is available. If enabled, you can click on the icon to get a variety of detailed information about the connections.

- Varvy’s JavaScript usage tool examines how a page uses JavaScript. Its CSS delivery tool gives you an overview of how your page uses CSS.

- HTTP Status Code Checker and HTTP / HTTPS Header Check check status codes and HTTP response headers that the web server returns when requesting a URL.
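Online TTFB tools measure from their own servers. To get a rough number from your own machine, the sketch below times a single request with Python's `http.client` until the status line and headers arrive. That approximates TTFB as DNS lookup plus connection and TLS setup plus server processing; it is not necessarily how any particular tool defines the metric, and the function names are hypothetical.

```python
import http.client
import time
from urllib.parse import urlsplit


def split_url(url):
    """Return (scheme, host, port, path_with_query) for an http(s) URL."""
    parts = urlsplit(url)
    port = parts.port or (443 if parts.scheme == "https" else 80)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    return parts.scheme, parts.hostname, port, path


def measure_ttfb(url, timeout=30):
    """Approximate TTFB in seconds: DNS + connect + TLS + server time until headers arrive."""
    scheme, host, port, path = split_url(url)
    conn_cls = http.client.HTTPSConnection if scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(host, port, timeout=timeout)
    start = time.perf_counter()
    try:
        conn.request("GET", path, headers={"User-Agent": "ttfb-sketch"})  # connects lazily here
        resp = conn.getresponse()  # returns once the status line and headers are read
        elapsed = time.perf_counter() - start
        resp.read()  # drain the body before closing
    finally:
        conn.close()
    return elapsed
```

Run it several times, for example `[measure_ttfb("https://example.com/") for _ in range(5)]`, and look at the spread rather than a single value.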

Testing Thoughts and Tips

Page Speed Testing = Fuzzy

Regardless of which test(s) you decide to use, you should consider any results as a bit fuzzy. What I mean is that you can run certain tests several times and get slightly different results. This can relate to how busy your server was when the test ran, something specific to the testing server, etc. Don’t worry about it too much. The key is to look for large changes and the appearance or disappearance of specific warnings. Just run each test multiple times if you suspect something strange has happened.
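One simple way to apply this advice is to record several runs before and after a change and compare medians rather than single results. The Python below is a sketch of that idea; the rule in `looks_like_real_change` (a change must exceed both a minimum delta you choose and the spread of either set of runs) is my own rough heuristic, not a statistical test, and both function names are hypothetical.

```python
import statistics


def summarize_runs(samples):
    """Summarize repeated measurements (load times in seconds, or scores)."""
    if not samples:
        raise ValueError("need at least one sample")
    return {
        "runs": len(samples),
        "median": statistics.median(samples),
        "min": min(samples),
        "max": max(samples),
        "spread": max(samples) - min(samples),
    }


def looks_like_real_change(before, after, min_delta):
    """Call a change real only if the medians differ by more than min_delta
    and by more than the noise (spread) seen in either set of runs."""
    b, a = summarize_runs(before), summarize_runs(after)
    delta = abs(a["median"] - b["median"])
    return delta > min_delta and delta > max(b["spread"], a["spread"])
```

For load times, `looks_like_real_change(before, after, min_delta=0.2)` would ignore differences smaller than 0.2 seconds as well as differences buried in run-to-run noise.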

Discrepancy between Speed and Performance

It is possible to have a high performance score and slow site speed and vice versa. This is especially noticeable if you employ a caching solution but don’t take any other steps to improve the performance items generally tested for. Alternatively, you could have a well-optimized site but one with large page size and/or poor server performance. The tools mentioned above that test both speed and performance are useful for seeing this discrepancy if it exists.

Testing Times and Locations

When possible, try both geographically close and distant test locations to see the load time differences for your site. You should also test more than once because multiple tests can show different loading time results. You also might want to try testing at a different time as perhaps the fluctuation is due to server load.

Testing Without Ads

Google AdSense penalizes PageSpeed Insights scores so you will want a way to test your site with it disabled. Fortunately, some ad plugins provide an easy way to not display ads on a per-page basis or based on some filtering criteria. I use and highly recommend Ad Inserter, which offers a variety of filter options to accomplish this. With Ad Inserter installed, there are two ideas worth considering:

- Create a page and/or post to use just for testing purposes. For my sites, I created a page called noads and filled it with Lorem Ipsum text. A test page is also very helpful if you are trying to optimize multiple websites, because it lets you make roughly apples-to-apples comparisons.

- Use the query string filter to block ads on any existing page. So, for example, you can filter with a query string of ‘noads’ (e.g., domain.com/?noads=true). This is a great idea because it allows you to easily test any page on your site. There are two potential downsides, however. First, the presence of query strings is actually a warning on some of the testing sites (especially Pingdom). Second, your caching solution may not support caching URLs with query strings or may have the option disabled. If the latter, enable it, at least while you test.
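If you test many pages this way, a tiny helper can add the query string for you. This Python sketch appends (or overwrites) `noads=true` while keeping any existing parameters. The parameter name must match whatever you entered in Ad Inserter's query-string filter, and `with_noads` is a hypothetical name.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_noads(url, param="noads", value="true"):
    """Return the URL with noads=true added (or replaced), keeping other query parameters."""
    parts = urlsplit(url)
    # drop any existing copy of the parameter, keep everything else in order
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != param]
    query.append((param, value))
    return urlunsplit(parts._replace(query=urlencode(query)))
```

For example, `with_noads("https://domain.com/?p=12")` gives `https://domain.com/?p=12&noads=true`, which you can paste into any of the testing sites.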

Testing with Caching

If you are making iterative site changes, some page speed test sites may not notice those changes due to caching. For plugin-based caching, you can purge the cache, either just for the page you are testing or for the entire site, depending on your caching plugin. If you have server-based caching, you may have no recourse but to wait until a later time/date to re-test or to test a different page. If you are using Cloudflare, don’t forget to purge its cache and put it into development mode.

PageSpeed Insights Caching

Google caches its PageSpeed Insights results for several minutes. This can become a small gotcha when testing the effectiveness of your optimization changes. Just be sure to wait a few minutes for Google to clear its cache between testing your pre- and post-change site.

Understanding Speed Factors

Before starting actual optimization work, let’s understand at a high level which factors affect site speed. The list of factors that come into play is long and this one probably misses some, but here are things to consider:

- Type of server: WordPress optimized, dedicated, VPS, shared, cloud, SSD or disk storage, amount of RAM, etc.

- Type of server OS (Linux vs. Windows) and web server software (Nginx vs. Apache vs. LiteSpeed)

- Use of server-based caching and acceleration tools like Memcached, OPcache, Nginx caching, and LiteSpeed Cache.

- Use of plugin-based caching like W3 Total Cache, WP Fastest Cache, WP Rocket, and WP Super Cache.

- HTTP vs. HTTPS

- HTTP/2

- Nature of your permalink structure (flat vs deeply nested)

- Amount of dynamic content and/or database (DB) queries required by your site. This is a function of the theme and plugins you use as well as WordPress itself.

- Images: number, size and whether they are optimized or not

- The use of video or other rich media

- Overall size of your pages (HTML, CSS, JS, fonts, images)

- Different types of pages. Your score will vary from page to page for various reasons, including the fact that certain pages use plugins that other pages don’t use, some pages are more image heavy, etc. One big gotcha to beware of involves pages—like e-commerce pages—that you exclude from caching.

- Using (or not) a content delivery network (CDN)

- DNS configuration

- Use of redirects (usually 301, which are legitimate and should not be eliminated without good reason, but can slow down your site)

- Theme issues. The theme you choose will affect your site’s performance. If it is well written (uses few or no external scripts, uses only optimized images, minimizes database queries, etc.), you should have no real problems. WordPress makes it easy to add and switch themes, so if you are wondering how yours performs, test it against some others.
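Because each redirect adds a full request/response round trip, it helps to see every hop a URL goes through (for example, http to https, then non-www to www). This is a minimal Python sketch that follows redirects manually with HEAD requests; the `fetch` parameter exists so the logic can be exercised without a network, and the function names are hypothetical. Some servers answer HEAD differently from GET, so treat the result as a guide.

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = (301, 302, 303, 307, 308)


def head_request(url, timeout=30):
    """Default fetcher: send a HEAD request and return (status, Location header or None)."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.hostname, parts.port, timeout=timeout)
    path = (parts.path or "/") + ("?" + parts.query if parts.query else "")
    try:
        conn.request("HEAD", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


def trace_redirects(url, fetch=head_request, max_hops=10):
    """Follow redirects one at a time and return the list of (url, status) hops."""
    hops = []
    for _ in range(max_hops):
        status, location = fetch(url)
        hops.append((url, status))
        if status not in REDIRECT_CODES or not location:
            return hops
        url = urljoin(url, location)  # Location may be relative
    raise RuntimeError(f"more than {max_hops} redirects (possible loop)")
```

If `trace_redirects("http://example.com/")` shows more than one hop before the final 200, check whether your internal links, canonical URL and site address settings all point at the final URL.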

One reason WordPress is so popular and so powerful is because it is modular and flexible, thanks to plugins. Unfortunately, the price of this flexibility is often a slower site.

I use almost 50 different plugins on my site! That may be a lot and perhaps I could get by with fewer, but what impact does having this many plugins actually have? While many will admonish against using too many plugins, plugins are not per se bad. They cause problems when they (1) are poorly coded, (2) load external files (CSS, JS), or (3) make extensive DB requests.

Of course, it is always a good idea when choosing plugins to ask yourself if you really need the functionality it provides and what impact it might possibly have on your site performance. If you add a new plugin after optimizing your site, be sure to re-run your performance tests to see what impact, if any, it had.

There are also ways you can modify your theme’s functions file to remove any negative effect a plugin might have on your site’s performance. I will illustrate a few of these later, but you may also want to read the useful article, “How WordPress Plugins Affect Your Site’s Load Time.”

My biggest recommendation is that you choose your social sharing plugin very carefully. These can be particularly important to your site speed and performance scores.

Note: If a plugin adds design elements to your page, make sure it is responsive for mobile screens since it makes no sense to have a responsive theme that includes non-responsive content added by a plugin.

Web Hosting and PageSpeed Insights

The quality of your hosting setup, notably the server configuration and the traffic load it faces, can significantly affect the “Reduce server response times (TTFB)” audit in PageSpeed Insights.

I have previously achieved a perfect PageSpeed Insights score with a shared server running Apache, but that is not easy to do. Since I first wrote this guide I have moved my sites to new hosting providers. I am still using shared servers, but my new providers have faster operating systems, better server-level caching, and stricter resource limits on accounts (making for a “safer” server neighborhood).

My sites still fail the TTFB test frequently (it’s hit or miss), which is annoying. I am mostly happy with both my current providers, SiteGround and TMDHosting, and recommend either of them. I am thinking of switching one or both of them since their renewal is due soon and I am curious to test out some competitors. If I do, I will update the status of their performance, especially in terms of TTFB.

Social Sharing Plugins Hurt Your PageSpeed Insights Score

Frank Goossen discussed how social sharing can significantly slow down your website in a post back in 2012. The plugins he considered have surely changed since then, and many more were not tested, but his central point remains true today: social sharing widgets slow down page loading and rendering because they usually include third-party tracking for behavioral marketing purposes. Ultimately, you have to decide how important having social sharing buttons is to you.

Assuming you want social sharing, there are options that won’t kill your page speed, but they likely won’t look the best or have all the bells and whistles. For what it’s worth, I have long used Simple Share Buttons Adder. I don’t recommend it since it was acquired by ShareThis and they added a lot of tracking and other scripts. Now it is incredibly bloated (read the countless bad reviews since the acquisition). I dequeue all those annoying new scripts so I am still using an older version of it, but you should probably look elsewhere.

Page Speed Optimization Categories

Now that we understand what kinds of things affect page speed, let’s consider how to address them. Later, I will talk specifics but now I am thinking big picture. One useful framework, known by the acronym PRPL, describes four categories of optimization efforts:

- Push (or preload) the most important resources

- Render the initial above the fold content (the portion of the webpage that is visible without scrolling) as soon as possible

- Pre-cache remaining assets

- Lazy load other resources and non-critical assets
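The “push (or preload)” step is commonly expressed with `rel=preload` hints, either as `<link rel="preload">` tags in the page head or as an HTTP `Link` response header. As a sketch of what such a header value looks like, the Python below builds one; the file paths in the example are hypothetical, and how you actually attach the header (server configuration, PHP in your child theme, a plugin, or a CDN) depends on your setup. Fonts are fetched in CORS mode, which is why their preload hint carries `crossorigin`.

```python
def preload_link(href, as_type, crossorigin=False):
    """Build one value for an HTTP Link header that asks the browser to preload a resource."""
    value = f"<{href}>; rel=preload; as={as_type}"
    if crossorigin:  # needed for fonts, which browsers fetch in CORS mode
        value += "; crossorigin"
    return value


def link_header(resources):
    """Join several (href, as_type, crossorigin) tuples into one comma-separated Link value."""
    return ", ".join(preload_link(href, as_type, cors) for href, as_type, cors in resources)
```

Preload only what the above-the-fold content really needs; preloading everything just competes for the same bandwidth.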

Getting a little more specific, but not in the weeds yet, the following are common types of page speed optimizations:

- Caching (server and client)

- Optimizing and compressing images

- Minifying and compressing code

- Combining (or splitting) JavaScript and CSS files

- Deferring scripts that aren’t needed above the fold

- Inlining code that is needed above the fold

- Geographically distributing content and resources closer to the user (CDN)

- Self-hosting third-party scripts

- Preloading scripts needed above the fold

- Adaptive serving of resources based on the user’s device or network conditions
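To get a feel for why compressing text resources matters, the Python sketch below gzips a string and reports the savings. Repetitive text such as CSS and HTML compresses very well. The sample stylesheet is made up, and level 6 is simply zlib's default, not a recommendation for your server.

```python
import gzip


def compression_report(text, level=6):
    """Return original and gzip-compressed sizes in bytes plus the percentage saved."""
    raw = text.encode("utf-8")
    packed = gzip.compress(raw, compresslevel=level)
    saved = 100 * (1 - len(packed) / len(raw)) if raw else 0.0
    return {"original": len(raw), "gzipped": len(packed), "saved_pct": round(saved, 1)}


# A made-up, highly repetitive stylesheet standing in for a real CSS file.
sample_css = ".entry-title { margin: 0 0 1em; font-weight: 700; }\n" * 200
report = compression_report(sample_css)
```

Real files compress less dramatically than this artificial sample, but you can pass the contents of your own theme's CSS or JS files to `compression_report` to see what your server's compression is worth.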

A CDN is a network of servers around the world which cache a copy of the static parts of your site and serve these to your site’s visitors from the server closest to their location, thus speeding up page loading time. Most CDNs—like the popular StackPath (formerly MaxCDN) and Amazon CloudFront—are paid products, but Cloudflare offers a good free plan. Some caching plugins only play nice with certain CDNs and some might not play nice with any. Be sure to read the documentation for both plugin and CDN.

If your cache plugin and your CDN both offer extra features, like minification, make sure to only use one. As with my advice for caching, I recommend you test a CDN last.

Be aware that some tests—notably WebPageTest and GTmetrix—account for the use of a CDN and some—notably Google’s PageSpeed Insights—don’t. Interestingly, a CDN can potentially make your site marginally slower on a “local” level but make it much faster when tested around the world.

A Methodology for Testing Optimization Plugins

Site optimization is a real challenge and there is no magic formula. The nature of your “solution” will depend on the speed factors identified above, the different tools and services you choose to use and whether or not you can do some light or heavy lifting (via custom coding) yourself. Your goals will also play an important role. Are you looking for a simple solution (say, via one or two plugins) or are you comfortable mixing and matching tools? What score are you willing to accept?

I have found a great combination of manual tweaks and plugins and I will share those with you. But, here’s the thing. Your site is completely different than mine or anyone else’s. So, your challenges will be different as well. And, frankly, there are a lot of tools to choose from. The fact that I am not using a tool means fairly little—it might be a perfect solution for your site. Because of this, the best strategy is to develop a structured method for trying and comparing different tools. My recommended methodology is to first test available plugins and then make manual changes. Here is a step-by-step breakdown of the process.

Step 0: Back Up Your Site and Use a Development Platform

Before starting, make sure your site is exactly the way you want it. That means configuring and deploying every plugin, every theme design tweak, every external service. Any change you make to your site can and will affect your optimization results. Thus, there is no point in testing before you are really ready.

Some of the plugins and changes you are going to investigate have the potential to screw up your site. Having a backup that can restore your site to its original, working state is a must. There are a lot of good backup plugins but my favorite is UpdraftPlus Backup and Restoration.

While having a backup is a must, the safest way to experiment with your site is with a development version. This requires a bit of effort, and if you install one on your personal computer, you won’t be able to run many online tests against it because they need a publicly accessible URL (though the Google PageSpeed Insights extension for Chrome and/or Lighthouse in the Chrome Developer Tools should work). See my guide for setting up a development version of your WordPress site.

Step 1: Create a Child Theme

The very first thing you should do, if you haven’t already, is create and activate a child theme. This might sound intimidating but it really is quite easy to do. If you plan to make any manual changes, it is absolutely necessary, because every theme update overwrites any changes you made directly to the theme’s own files. I won’t go into details about this topic, but you can find everything you need to know via the official WordPress tutorial on how to create a child theme.

Step 2: Back Up Again

If anything goes wrong with your child theme you can revert to your previous backup. Assuming everything goes smoothly, make another backup so you don’t have to repeat Step 1 if you need to restore a backup again later in the testing process.

Step 3: Decide Which Tests to Use

From the tests I identified earlier, choose the ones you want to use. I use PageSpeed Insights, Pingdom, WebPagetest and GTmetrix.

Step 4: Create a Spreadsheet to Track Your Work

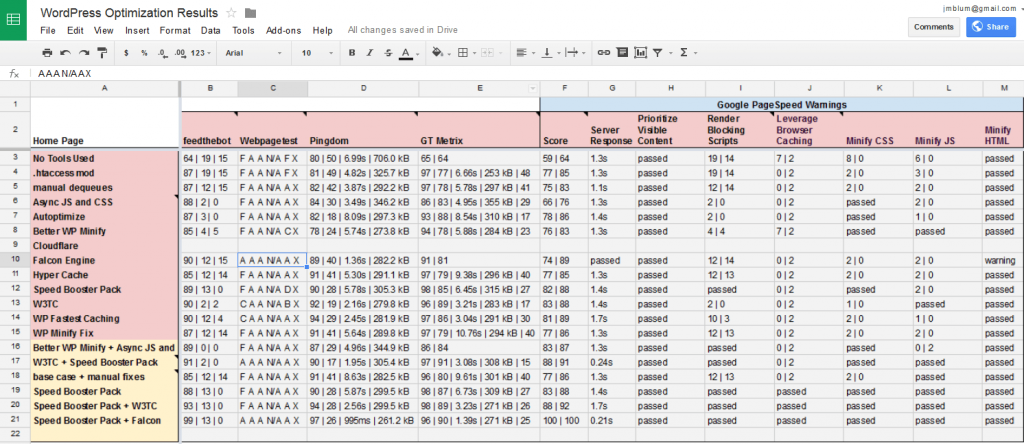

If you are comfortable with spreadsheets, create one that tracks the tests you have chosen and the tools you choose. In one column list all the tools you try (include the base case you will run in the next step). Since many tools have multiple options, you may want to consider listing different tests with different settings. Just add a new row for each. In the top row, list the scores and the possible warnings you can get from the testing tool.

As you test, use the spreadsheet’s notes feature to make any relevant comments about a plugin. It is easy to forget things in the midst of so much testing, so notes are useful.

To make things easier, feel free to use this public Google spreadsheet I created based on my own testing.

Step 5: Get a Baseline Measure

Before choosing any tools to test or making any changes to your new child theme, test your site so you can track improvement and see what problems you need to address. Add the results to your spreadsheet on the first row.

Step 6: Enable Compression and Leverage Browser Caching

Two warnings you will most likely see in your base case test are “Enable text compression” and “Serve static assets with an efficient cache policy.” Some plugins can fix these, but if your site runs on an Apache (or Apache-compatible) server with the mod_deflate and mod_expires modules available, you can also fix them easily by manually updating your .htaccess file. Simply add the following lines to the top:

```apache
# ---------------- Activate compression (mod_deflate) ----------------
# The IfModule wrapper keeps the site from breaking if the module is missing.
<IfModule mod_deflate.c>
    AddOutputFilterByType DEFLATE text/plain
    AddOutputFilterByType DEFLATE text/html
    AddOutputFilterByType DEFLATE text/xml
    AddOutputFilterByType DEFLATE text/css
    AddOutputFilterByType DEFLATE application/xml
    AddOutputFilterByType DEFLATE application/xhtml+xml
    AddOutputFilterByType DEFLATE application/rss+xml
    AddOutputFilterByType DEFLATE application/javascript
    AddOutputFilterByType DEFLATE application/x-javascript
</IfModule>

# ---------------- Activate browser caching (mod_expires) ----------------
<IfModule mod_expires.c>
    ExpiresActive On
    ExpiresDefault "access plus 1 month 1 days"
    # HTML cached this long can hide content updates from returning visitors
    ExpiresByType text/html "access plus 1 month 1 days"
    ExpiresByType image/gif "access plus 1 month 1 days"
    ExpiresByType image/jpeg "access plus 1 month 1 days"
    ExpiresByType image/png "access plus 1 month 1 days"
    ExpiresByType text/css "access plus 1 month 1 days"
    ExpiresByType text/javascript "access plus 1 month 1 week"
    ExpiresByType application/javascript "access plus 1 month 1 days"
    ExpiresByType application/x-javascript "access plus 1 month 1 days"
    ExpiresByType text/xml "access plus 1 seconds"
</IfModule>
```

Fixing just these two warnings will make a fairly significant improvement to your speed and performance results. Thus, the plugins that do this for you will probably seem much better than others. For this reason, I prefer the manual fix and then I consider the new scores the base case. I think this gives me a better feeling for how much improvement the different plugins I try are making.
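If you want to confirm that these .htaccess rules are actually working (or that your host supports them at all), you can look at the response headers your server sends. Below is a minimal sketch in PHP 8; `check_speed_headers()` is a hypothetical helper name, not part of WordPress. It takes the associative array returned by PHP’s built-in `get_headers()` and roughly reports whether the response was compressed and whether it carries a future cache lifetime. Note that the server only compresses when the request advertises support, hence the `Accept-Encoding` header in the commented usage.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: check response headers for the two Step 6 fixes.
 * $headers is the associative array returned by get_headers($url, true).
 * Returns ['compressed' => bool, 'cached' => bool].
 */
function check_speed_headers(array $headers): array
{
    // Lowercase the header names. After a redirect PHP stores repeated
    // headers as arrays, so keep the last (final response) value.
    $normalized = [];
    foreach ($headers as $name => $value) {
        if (is_int($name)) {
            continue; // status lines such as "HTTP/1.1 200 OK"
        }
        $normalized[strtolower($name)] = is_array($value) ? end($value) : $value;
    }

    // mod_deflate sends "gzip"; "deflate" and Brotli ("br") are accepted too.
    $encoding = strtolower((string) ($normalized['content-encoding'] ?? ''));
    $compressed = in_array($encoding, ['gzip', 'deflate', 'br'], true);

    // mod_expires sends an Expires date and a Cache-Control max-age.
    $cacheControl = (string) ($normalized['cache-control'] ?? '');
    $expires = isset($normalized['expires']) ? strtotime((string) $normalized['expires']) : false;
    $cached = preg_match('/max-age=[1-9]\d*/', $cacheControl) === 1
        || ($expires !== false && $expires > time());

    return ['compressed' => $compressed, 'cached' => $cached];
}

// Usage sketch (the URL is a placeholder for one of your own CSS or JS files):
// $context = stream_context_create(['http' => ['header' => "Accept-Encoding: gzip, deflate\r\n"]]);
// $headers = get_headers('https://example.com/wp-content/themes/my-child/style.css', true, $context);
// var_dump(check_speed_headers($headers));
```

Checking a CSS or JavaScript file rather than a page is deliberate: those are the static assets the cache policy audit looks at.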

Step 7: Choose Your Tools

Now it is time to add some optimizations. These can usually be done via plugins and/or by modifying your theme. If you have been doing research you may already have a list of things you want to try. If not, I will list many throughout this guide. In either case you will probably want to consider solutions based on the page speed optimization categories I listed earlier.

Step 8: Organize and Prioritize Your Tests

The order in which you test your selected tools is not too important, but, as I mentioned earlier, I recommend testing non-caching options first, because when you use a caching plugin you need to clear the cache any time you make a change you wish to test.

I would prioritize simpler plugins first. If a plugin does only one thing and has few options, it will be easier to test and will give you a pretty immediate idea of its effectiveness. If you are going to test more complex plugins that contain the same functionality as a simpler plugin, consider testing just that functionality separately from all options combined.

You can test various plugins without fully understanding what they do, but the more you understand the better, especially because some options are fairly safe to use and some can cause major problems for your site. With this in mind, before you test any plugin, take time to understand its functions and options. Where appropriate, test different functions and combinations thereof separately. Enter every test you plan to run on its own row in your tracking spreadsheet. Include multiple rows covering various plugin options.

It will be tempting to jump ahead and start testing multiple plugins together. Stay disciplined and avoid this temptation. After you have all your individual plugin results you can review them and see which combinations of tools might solve the most warnings possible. Track these combinations on separate rows of your spreadsheet. If you use my public template you will see I have used different colors for individual plugins and combinations.

Note that some plugins can work together and others cannot. You will have to figure this out for your situation but generally, two caching plugins won’t work together. Also, two plugins that can both alter JavaScript or CSS probably won’t work together unless you use one for JS and the other for CSS.

Step 9: Test and Track (with Tips and Warnings)

Now, run your tests and enter the results in your spreadsheet. As you run more tests, hopefully you will start to learn a lot more about your theme and the plugins you use and how they might be affecting your performance. You may even decide to drop or change some plugins. Likewise, you will start to better understand the performance factors that PageSpeed Insights and other tests consider. You may also decide that you should test combinations of plugins and options you hadn’t previously considered. Just test them and add the results to your spreadsheet as you do.

To be thorough, you should test multiple pages. A home page or blog index page will typically be different from a post. And posts with many images and videos will perform differently than basic posts. Likewise, custom pages, search results, archive pages, etc. will all potentially perform differently and you should test them separately.

It may seem like overkill to test multiple URLs separately, but it is the most accurate way of testing performance, as different plugins handle different types of pages in different ways. Of course, being realistic, that is a lot of work. A compromise is to test one or two pages now and, later in the process as you narrow down your final tool choices, go back and test more pages.

Speaking of being thorough, you should check to make sure a plugin doesn’t break your site. Like testing multiple pages, this can be a pain, so focus on one or two pages and then check more thoroughly later in the process after you narrow your plugin selection. When you do check, pay special attention to any features created by plugins. For example, if you use a ratings plugin or page view tracking plugin, those functions may be broken. Ad management plugins may or may not work properly, etc. Most servers create error logs (sometimes, conveniently, in the root and/or theme folder). Find out where yours is located and see if it has any errors listed.

Here are some other things to consider as you activate plugins and run your tests.

- Google is really strict about image optimization warnings. Even if you use a great plugin like EWWW Image Optimizer to optimize all your images, PageSpeed Insights may not consider your efforts good enough. So, in your base case you will probably see an image optimization warning from the PageSpeed Insights test. Whether you want to fix these issues before continuing your tests or do so after is a personal decision. I opted for doing so later, but I think I should have done so at the beginning.

- A common responsive design technique is to change the navigation menu dynamically as the screen size changes. Usually this works just fine, but some of the things you do to optimize speed might break it, so after making tweaks, always resize your browser to make sure the responsive navigation and design still work well.

- If you have member-only content, make sure you test that separately. Take special care to make sure that any caching presents both non-member and member views properly.

Sometimes while testing a plugin you may learn something useful that leads you to implement a manual tweak. This tweak may be specific to that plugin but it also might be a change you want to make in all circumstances. In the latter case, to be thorough you should repeat the tests you have already made, this time including the new manual change.

Step 10: Choose Your Final Plugin(s)

After you finish your plugin testing you should hopefully have a combination of plugins and their respective configurations that performs best for your site. Activate this combination and remove all the plugins you decide not to use.

Step 11: Manual Tweaks (Tackling Google PageSpeed Insights Tests)

You now have your preferred combination of plugins but almost certainly you have not gotten your 100 score yet. The only option left to close the gap is to make some manual tweaks. If you are satisfied with where you are now, feel free to call it a day. If not, read on for a look at the specific tests PageSpeed Insights performs and recommended coding changes you can make to fix the warnings they produce. I will provide the necessary code and instructions, so I think anyone can implement these. Leave a comment if anything I discuss is not clear enough.

Optimization: Function vs. Presentation

Throughout the guide I will offer some suggested code samples (snippets) to help optimize your site. I will generally talk about placing these in your child theme’s functions.php file. That is common practice and works well, but common practice isn’t necessarily best practice.

Best practice is to use themes only to control presentation—that is the look and feel—of a site. To make back end changes that improve performance but don’t alter the site’s presentation, you should use a custom plugin. That way, if you ever change to a different theme, you won’t have to port those page speed modifications to it. And, by that time you may have forgotten the how, what, and why of those changes. (hint: always generously comment your coding work)

Creating a plugin is not difficult but is beyond the scope of this article. As an alternative, you could try the Code Snippets plugin. I haven’t used it myself but it seems to have some good features and might help you keep all your various modifications organized.

Stages of Page Speed Optimization

It seems that people progress in stages in their site performance optimization efforts, as follows.

- Only worry about Google’s PageSpeed Insights test and pick the low-hanging fruit (compression, browser caching, page caching).

- Still focus primarily on PageSpeed Insights but try to use extra tools and techniques to get more performance.

- Start to worry about multiple test sites, but only for the “big picture.”

- Start to notice the more detailed warnings spelled out in the various testing sites.

Finally, another key change point is whether you focus your optimization efforts on all pages or just the home page. That change could happen at any stage but a lot of people focus on just one page.

Understanding WordPress: Tackling Google PageSpeed Insights with Code Changes

Most of the manual coding changes I use involve working around issues with external scripts so I will first discuss at a high level how WordPress handles these by using the concepts of enqueuing/dequeuing and action and filter hooks.

When I (and others) talk about including code from a file other than the main HTML file loaded in your browser, I will often use the words script, file and resource interchangeably. A script should really only refer to a JavaScript file but common practice across the Web has it often used to refer to a CSS file as well. Likewise, an external resource is either kind of file.

Queuing Resources

Queuing is one of the most important concepts to understand when you want to really master WordPress. I doubt that is your goal, but since it is something we need to use for many of our manual performance tweaks, let me try to explain it.

Resources

Every theme and plugin can potentially include two types of resources: styles (CSS files) and JavaScript files. The core WordPress program has some as well (notably, jQuery). This system helps make WordPress very flexible and powerful, but the problem is knowing in what order to load all these resources. In some cases, any order might do just fine, but often one resource relies on another loading before it. Another concern is making sure that the same resource doesn’t load more than once. This can happen when two plugins use the same JavaScript file, which is especially common with the widely used jQuery.

In the old days—and, unfortunately, occasionally still today—developers would just write the code needed to include a file directly in their plugin functions. When done this way, WordPress—and other plugin developers—have no control over the order or nature of loading that resource. You can imagine the trouble that would result if every theme and plugin developer did this.

Enqueue

As a solution, WordPress has declared that the proper way to load a resource is by something called enqueuing. WordPress uses this system to create a “queue” of external files. Two specifications determine where a resource lands in this queue.

A dependency specification, passed to the enqueue function itself, tells WordPress which other resources must load first. A priority specification, passed to the add_action call that runs your enqueuing function, determines when that function runs relative to other functions attached to the same hook. The default priority is 10; a lower number runs earlier and a higher number runs later. Sometimes you can ignore both of these, but if your code requires that another resource load before one of your own, using one or both of these specifications is a good way to do that.
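To make the dependency idea more concrete, here is a stripped-down model of how a queue can be put in order so that every resource prints after the resources it depends on, and each resource prints only once. It is a plain depth-first ordering written for illustration under my own assumptions; it is not WordPress’s actual code, and `resolve_print_order()` and the handles are hypothetical.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical model of dependency-aware queue ordering.
 * $registered maps handle => list of handles it depends on.
 * $queue is the list of handles that were enqueued, in enqueue order.
 * Returns the print order: dependencies first, each handle only once.
 */
function resolve_print_order(array $registered, array $queue): array
{
    $done = [];
    $order = [];

    $visit = function (string $handle, array $stack) use (&$visit, &$done, &$order, $registered): void {
        if (isset($done[$handle])) {
            return; // already printed: never load a resource twice
        }
        if (in_array($handle, $stack, true)) {
            throw new RuntimeException("Circular dependency involving '$handle'");
        }
        if (!array_key_exists($handle, $registered)) {
            throw new RuntimeException("Unregistered handle '$handle'");
        }
        foreach ($registered[$handle] as $dependency) {
            $visit($dependency, [...$stack, $handle]); // load what we depend on first
        }
        $done[$handle] = true;
        $order[] = $handle;
    };

    foreach ($queue as $handle) {
        $visit($handle, []);
    }
    return $order;
}

// Two hypothetical plugins both need jQuery; it is printed once, and first.
$registered = [
    'jquery'         => [],
    'slider-plugin'  => ['jquery'],
    'gallery-plugin' => ['jquery', 'slider-plugin'],
];
print_r(resolve_print_order($registered, ['gallery-plugin', 'slider-plugin']));
// prints the handles in this order: jquery, slider-plugin, gallery-plugin
```

Notice that gallery-plugin was enqueued first yet prints last, because its dependency specification forces jQuery and slider-plugin ahead of it.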

Dequeue

The WordPress developers also created a way to dequeue resources. This is crucial because it lets you “fix” problems that might occur when a plugin you are using has enqueued a resource in a way that adversely affects your site’s performance. Simply dequeue the problem resource and re-enqueue it with proper dependency or priority.

Later, we’ll see that some plugins and themes enqueue scripts on every page even though they may not be used at all or may be used only on certain pages. This is obviously not optimal and, thanks to the dequeue method we can fix this inefficiency.

Register vs. Enqueue

Technically speaking, there are two steps to enqueuing: first you register a resource then you load it. I won’t go into details about this but just know that enqueuing actually takes care of both registering and loading. The difference exists because there are some cases where you might want to register (declare) an external resource but actually load it in a different place. That is beyond the scope of this guide so don’t worry about it.

Handles

The last important thing to know about enqueuing in WordPress is the idea of a handle. Basically, this is just a nickname given for the script. Whenever you enqueue a script you need to specify a handle and the exact location of that script. Why both? Mostly for convenience. As I just mentioned, you can register and load a file separately. If you have already registered a script, WordPress knows its location so when you are ready to load it you can just reference the handle. Likewise, if you want to dequeue and re-enqueue a script, knowing the handle is enough.
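Here is a toy PHP model of registering, enqueuing and dequeuing by handle. `ToyAssetRegistry` and the file paths are hypothetical and only mimic the general idea (in WordPress the real work is done by functions such as wp_register_script, wp_enqueue_script and wp_dequeue_script), but it shows why a handle alone is enough once a file has been registered.

```php
<?php
declare(strict_types=1);

/**
 * Toy model of WordPress-style resource handles (hypothetical, for illustration).
 * register() records where a handle's file lives; enqueue() marks it for loading;
 * dequeue() removes it from the queue using the handle alone.
 */
final class ToyAssetRegistry
{
    /** @var array<string, string> handle => source URL */
    private array $registered = [];
    /** @var list<string> handles queued for output, in order */
    private array $queue = [];

    public function register(string $handle, string $src): void
    {
        // The first registration of a handle wins; later ones are ignored.
        $this->registered[$handle] ??= $src;
    }

    public function enqueue(string $handle, ?string $src = null): void
    {
        // Passing a source registers and enqueues in one step.
        if ($src !== null) {
            $this->register($handle, $src);
        }
        if (!isset($this->registered[$handle])) {
            throw new InvalidArgumentException("Unknown handle '$handle'"); // toy behavior
        }
        if (!in_array($handle, $this->queue, true)) {
            $this->queue[] = $handle;
        }
    }

    public function dequeue(string $handle): void
    {
        // Only the handle is needed; the registry already knows the file.
        $this->queue = array_values(array_diff($this->queue, [$handle]));
    }

    /** @return list<string> URLs that would be printed, in queue order */
    public function urls(): array
    {
        return array_map(fn (string $h): string => $this->registered[$h], $this->queue);
    }
}

$assets = new ToyAssetRegistry();
$assets->register('matchHeight', '/js/jquery.matchHeight.js'); // declared, not yet loaded
$assets->enqueue('theme_tab_widget', '/js/tab-widget.js');      // register + load in one call
$assets->enqueue('matchHeight');                                 // load by handle only
$assets->dequeue('theme_tab_widget');                            // remove by handle only
print_r($assets->urls()); // only /js/jquery.matchHeight.js remains
```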

List All Handles

So, how do we find handles used for our site? There are various ways to do this. Probably the easiest is to use a plugin like Debug This or Query Monitor. If you prefer a solution that doesn’t require a plugin, the following code—modified from code by the author of the Debug Objects plugin (which is no longer maintained)—in your child theme functions file will do the trick.

```php
// --------------------------------------------------------------------------
// --- List all relevant handles (only for admin level)
// --------------------------------------------------------------------------
function wp_list_enqueued( $handles = array() ) {
	global $wp_scripts, $wp_styles;

	// --- only show to a logged-in administrator (nothing at all is printed for anyone else)
	if ( ! current_user_can( 'manage_options' ) ) {
		return;
	}
	?>
	<style>
		TABLE { width:98%; margin:0 auto; }
		TD { background:#fff; }
		.toprow { background:#707070; font-weight:bold; }
		.style { background:#ddd; }
		.script { background:#e9e9e9; }
	</style>
	<?php
	// --- scripts: map each registered handle to its file URL
	$script_urls = array();
	foreach ( $wp_scripts->registered as $registered ) {
		$script_urls[ $registered->handle ] = $registered->src;
	}

	// --- styles: map each registered handle to its file URL
	$style_urls = array();
	foreach ( $wp_styles->registered as $registered ) {
		$style_urls[ $registered->handle ] = $registered->src;
	}

	// --- if no handles were passed in, list everything in both queues
	if ( empty( $handles ) ) {
		$handles = array_values( array_merge( $wp_scripts->queue, $wp_styles->queue ) );
	}

	// --- output of values
	$scriptnum = 0;
	$stylenum  = 0;
	$output    = '<table>';
	$output   .= '<tr><td class="toprow">Order</td><td class="toprow">Handle</td><td class="toprow">URL</td></tr>';
	foreach ( $handles as $handle ) {
		$output .= '<tr>';
		if ( ! empty( $script_urls[ $handle ] ) ) {
			$scriptnum++;
			$output .= '<td class="script">' . $scriptnum . '</td><td class="script">' . esc_html( $handle ) . '</td><td class="script">' . esc_html( $script_urls[ $handle ] ) . '</td>';
		}
		if ( ! empty( $style_urls[ $handle ] ) ) {
			$stylenum++;
			$output .= '<td class="style">' . $stylenum . '</td><td class="style">' . esc_html( $handle ) . '</td><td class="style">' . esc_html( $style_urls[ $handle ] ) . '</td>';
		}
		$output .= '</tr>';
	}
	$output .= '</table>';
	echo $output;
}
add_action( 'wp_print_footer_scripts', 'wp_list_enqueued' );
```

That will only list the handles (and file URLs) for a logged-in administrator so the whole world won’t see it. But, you still should remove or comment out this code once you have the information you need.

Finally, if you have a text editor that can search for text in multiple files (e.g., in a directory) like my favorite Notepad++, then just search for the filename you are concerned with. Somewhere you will see an enqueue command that will include the handle name.
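If you are comfortable running a PHP script, you can do the same search programmatically. This is a rough sketch with a hypothetical helper name, `find_handles_for_file()`. It is a heuristic that only catches calls whose handle is a plain quoted string and whose file name appears in the same statement, so treat its results as hints rather than a complete list.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: search every .php file under $dir for
 * wp_enqueue_* / wp_register_* calls that mention $filename and return
 * the handles found, keyed by the file they appear in.
 * Heuristic only: reads the first quoted argument of each call and checks
 * the rest of that statement (up to the next semicolon) for the file name.
 */
function find_handles_for_file(string $dir, string $filename): array
{
    $pattern = '/wp_(?:enqueue|register)_(?:script|style)\s*\(\s*([\'"])([^\'"]+)\1([^;]*)/';
    $found = [];
    $files = new RecursiveIteratorIterator(
        new RecursiveDirectoryIterator($dir, FilesystemIterator::SKIP_DOTS)
    );
    foreach ($files as $file) {
        if (!$file->isFile() || strtolower($file->getExtension()) !== 'php') {
            continue;
        }
        $code = (string) file_get_contents($file->getPathname());
        if (!preg_match_all($pattern, $code, $matches, PREG_SET_ORDER)) {
            continue; // no enqueue/register calls in this file
        }
        foreach ($matches as $m) {
            // $m[2] is the handle, $m[3] the rest of the statement
            if (str_contains($m[3], $filename)) {
                $found[$file->getPathname()][] = $m[2];
            }
        }
    }
    return $found;
}

// Example (the path is hypothetical): which handle loads jquery.matchHeight.js?
// print_r(find_handles_for_file('/var/www/html/wp-content/plugins', 'jquery.matchHeight.js'));
```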

Action and Filter Hooks

To really master WordPress, in addition to understanding enqueuing you also need to understand hooks. In fact, you cannot enqueue or dequeue a file without a hook. Again, I will just give the big picture.

According to the official WordPress site, hooks “are provided by WordPress to allow your plugin to ‘hook into’ the rest of WordPress; that is, to call functions in your plugin at specific times, and thereby set your plugin in motion.” There are two kinds of hooks: actions and filters.

Basically, an action lets you add or remove code whereas a filter lets you modify (replace) data. Sometimes either will work, but depending on what you want to accomplish one is preferred (or required).

Whether you are adding or removing code via an action or replacing data via a filter, WordPress needs to know when to execute your code. That is what the hooks are for. WordPress builds a page in multiple steps and each of these steps is a hook. The steps that probably make the most sense to you would be to first load the header of the page, then the content, then any sidebars and finally the footer, but there are actually many more hooks that developers rely on.

add_action and add_filter

The add_action and add_filter commands implement actions and filters, respectively. These commands use some parameters, most notably the actual hook to use, the name of the function you wish to run, and the priority. In the code I presented above for listing handles, the last line is:

```php
add_action( 'wp_print_footer_scripts', 'wp_list_enqueued' );
```

This is an example of using the add_action command. Here I am omitting the priority parameter because it is optional and not needed for what I want to accomplish. But on the topic of priority, I should clarify that the hook you use is its own sort of priority: a function attached to an earlier hook runs before a function attached to a later hook, no matter what priority numbers they use. The priority number only decides the order among functions attached to the same hook. There are best practices for which hooks to use to enqueue scripts (officially wp_enqueue_scripts, though you will also see wp_print_scripts and wp_print_styles used). Knowing which hooks the relevant scripts on your site use will help you make any manual enqueuing changes.
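If the interplay between hooks and priorities still feels abstract, the toy model below may help. `ToyHooks` is a hypothetical class that imitates the general behavior described above; it is not WordPress’s implementation. Callbacks on the same hook run from the lowest priority number to the highest, while the hooks themselves fire in page-building order, so even priority 0 on a later hook runs after priority 99999 on an earlier one. It also shows the action/filter difference: an action just runs, while a filter returns a modified value.

```php
<?php
declare(strict_types=1);

/**
 * Toy model of WordPress hooks, for illustration only (not the real API).
 * Callbacks are grouped per hook and per priority number; lower numbers
 * run first, and equal priorities run in the order they were added.
 */
final class ToyHooks
{
    /** @var array<string, array<int, list<callable>>> */
    private static array $hooks = [];

    public static function add(string $hook, callable $callback, int $priority = 10): void
    {
        self::$hooks[$hook][$priority][] = $callback;
    }

    /** Action: run every callback for its side effects. */
    public static function doAction(string $hook): void
    {
        foreach (self::sorted($hook) as $callback) {
            $callback();
        }
    }

    /** Filter: pass a value through every callback and return the result. */
    public static function applyFilters(string $hook, mixed $value): mixed
    {
        foreach (self::sorted($hook) as $callback) {
            $value = $callback($value);
        }
        return $value;
    }

    /** All callbacks for one hook, lowest priority number first. */
    private static function sorted(string $hook): array
    {
        $byPriority = self::$hooks[$hook] ?? [];
        ksort($byPriority);
        return array_merge([], ...array_values($byPriority));
    }
}

$log = [];
// Added first, but priority 99999 makes it run after the default priority (10).
ToyHooks::add('wp_enqueue_scripts', function () use (&$log): void { $log[] = 'cleanup (99999)'; }, 99999);
ToyHooks::add('wp_enqueue_scripts', function () use (&$log): void { $log[] = 'plugin enqueue (10)'; });
// Priority 0 on a later hook still runs after everything on the earlier hook.
ToyHooks::add('wp_print_footer_scripts', function () use (&$log): void { $log[] = 'footer cleanup (0)'; }, 0);

// "Page building": the hooks fire in a fixed order.
ToyHooks::doAction('wp_enqueue_scripts');
ToyHooks::doAction('wp_print_footer_scripts');
print_r($log); // $log is now ['plugin enqueue (10)', 'cleanup (99999)', 'footer cleanup (0)']

// A filter hands back a modified copy of the data it receives.
ToyHooks::add('the_title', fn (string $title): string => strtoupper($title));
echo ToyHooks::applyFilters('the_title', 'Hello'), PHP_EOL; // HELLO
```

This is also why the dequeuing code later in this guide uses a very high priority on wp_enqueue_scripts, and why a script enqueued on a later hook cannot be removed from an earlier one.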

Looking again at the line of code above, wp_print_footer_scripts is the hook. This particular hook comes toward the end of the page building process (i.e., a very late step), which is what we want because we don’t want to miss any external scripts that are loaded in the footer (for reference, wp_footer is the hook immediately prior to wp_print_footer_scripts).

Don’t Worry If You Are a Bit Confused

Enqueuing and action/filter hooks are not easy concepts so if you got a bit lost in the last sections, don’t worry. Below, I will show you actual code changes I made that might also be useful for your site. Along the way, hopefully these concepts will start to make more sense.

Removing Unused Scripts and Styles

Themes and plugins shouldn’t include JavaScript and CSS files for unused features and functions but many do. These may or may not cause any PageSpeed Insights warnings, but to fully optimize your speed you should remove them. To do this, you will need to know the script handle(s).

For example, my theme offers a tab widget (popular posts, latest comments, etc.) that I don’t use. This widget relies on both a CSS file and a JavaScript file so I need to dequeue both. As it turns out, the handle for each is “theme_tab_widget.” I use the following code:

```php
function remove_assets() {
	// --- dequeue tab widget since I don't use it
	wp_dequeue_script( 'theme_tab_widget' );
	wp_dequeue_style( 'theme_tab_widget' );
}
add_action( 'wp_enqueue_scripts', 'remove_assets', 99999 );
```

Let’s take a quick look at what is happening here. Keep in mind the earlier discussion of action and filter hooks.

The first parameter in the add_action line is the action hook (wp_enqueue_scripts) that most theme and plugin developers use to enqueue their scripts. The second parameter is the remove_assets function which we created in the lines above the add_action command. The final parameter is the priority number. The default priority number for WordPress is 10 so using a number higher than that will run our function after all the default enqueuing finishes. That, of course, assumes that developers used the default. Some might set their own high value (say 20 or 99). So, we use a really high number (99999) just to be safe.

Note that we use two different wp_dequeue commands. wp_dequeue_script will dequeue a JavaScript file and wp_dequeue_style will dequeue a CSS file. My theme’s tab widget uses both but often you will only be dealing with one or the other.

My theme ended up including four features (8 total files) that I don’t use. I have only illustrated the tab widget feature, but you can just replicate the dequeue commands for other unnecessary scripts.

Limiting Scripts and Styles to Certain Pages: Contact Form 7

Contact Form 7 is probably the most popular contact form for WordPress. If you are like me, you only use it on your actual contact page but it loads its script by default on every page, which is wasteful. So, like above, let’s dequeue it—but add some code to make sure it does not dequeue on the contact page. In the same remove_assets function, add the following lines:

```php
// --- dequeue Contact Form 7 script on every page except contact page
if ( ! is_page( 'contact' ) ) {
	wp_dequeue_script( 'contact-form-7' );
}
```

So the function now looks like:

```php
function remove_assets() {
	// --- dequeue tab widget since I don't use it
	wp_dequeue_script( 'theme_tab_widget' );
	wp_dequeue_style( 'theme_tab_widget' );

	// --- dequeue Contact Form 7 script on every page except contact page
	if ( ! is_page( 'contact' ) ) {
		wp_dequeue_script( 'contact-form-7' );
	}
}
add_action( 'wp_enqueue_scripts', 'remove_assets', 99999 );
```

The key here is that you need to change “contact” to the permalink (page slug) of your contact page.
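If you end up with a longer list of handles to remove (my theme alone had eight files), a small rule table can keep remove_assets() readable. The sketch below is one hypothetical way to organize it: `DEQUEUE_RULES` and `handles_to_dequeue()` are my own illustrative names, and the page check compares only the page slug (WordPress’s is_page() also accepts titles and IDs). The WordPress-specific glue is shown as comments because it only runs inside WordPress.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical rule table: which handles to drop, and where they are allowed.
 * 'only_on' => null means "never needed, dequeue everywhere";
 * otherwise the handle is kept only on the listed page slugs.
 */
const DEQUEUE_RULES = [
    ['type' => 'script', 'handle' => 'theme_tab_widget', 'only_on' => null],
    ['type' => 'style',  'handle' => 'theme_tab_widget', 'only_on' => null],
    ['type' => 'script', 'handle' => 'contact-form-7',   'only_on' => ['contact']],
];

/**
 * Return the [type, handle] pairs to dequeue for the page with slug $slug
 * ($slug is null for non-page views such as posts or archives).
 */
function handles_to_dequeue(array $rules, ?string $slug): array
{
    $drop = [];
    foreach ($rules as $rule) {
        $allowedHere = $rule['only_on'] !== null
            && $slug !== null
            && in_array($slug, $rule['only_on'], true);
        if (!$allowedHere) {
            $drop[] = [$rule['type'], $rule['handle']];
        }
    }
    return $drop;
}

// Inside WordPress, remove_assets() could then loop over the result:
// $slug = is_page() ? get_post_field( 'post_name', get_queried_object_id() ) : null;
// foreach ( handles_to_dequeue( DEQUEUE_RULES, $slug ) as [ $type, $handle ] ) {
//     if ( $type === 'script' ) {
//         wp_dequeue_script( $handle );
//     } else {
//         wp_dequeue_style( $handle );
//     }
// }
```

Adding another unwanted file then means adding one line to the table rather than another if block.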

Dequeuing Tricky Scripts

One of my sites uses a pricing table plugin which was presenting me with two problems. First, it loads a JavaScript file on all pages even though I only use it on one page. Second—and I really don’t know why—this plugin’s CSS stylesheet was causing a warning from Google PageSpeed Insights.

Since the CSS involved is quite small, I decided to dequeue it and add the CSS styling to my main child stylesheet. The solution to the first problem is basically the same as for the Contact Form 7 script. At first, however, I was unable to dequeue either file.

After banging my head a bit I found out why. Remember my discussion of action hooks and how they form their own sort of priority? And, remember I said that the wp_enqueue_scripts action hook is the one most theme and plugin developers use to enqueue their scripts? Well, apparently the developer of this plugin enqueued the files in an action hook which occurs after wp_enqueue_scripts. The end result is that I couldn’t just include dequeue statements in my previous remove_assets function. Instead, I needed a new function that runs on a later action hook, as follows:

```php
function remove_assets_footer_scripts() {
	// --- dequeue Easy Pricing Tables stylesheet (its CSS now lives in my child stylesheet)
	wp_dequeue_style( 'dh-ptp-design1' );

	// --- dequeue pricing table match height script on all but the home page
	// --- (is_front_page() assumes the pricing table sits on the site's front page)
	if ( ! is_front_page() ) {
		wp_dequeue_script( 'matchHeight' );
	}
}
add_action( 'wp_print_footer_scripts', 'remove_assets_footer_scripts', 0 );
```

This looks a lot like the previous code but I have replaced wp_enqueue_scripts with wp_print_footer_scripts.

If you run into similar problems try this action hook. Or, more generally, look at the official WordPress list of action hooks. The first section, “Actions Run During a Typical Request” lists in order the action hooks. The most common action hooks I see referenced in online discussions are (in order of precedence):

- setup_theme

- after_setup_theme

- init (Typically used by plugins to initialize. The current user is already authenticated by this time.)

- wp_enqueue_scripts

- wp_print_styles

- wp_print_scripts

- wp_print_footer_scripts

Localize External Scripts and Resources

Sometimes our sites rely on scripts and resources from external servers for functionality we want. Common examples are fonts, ads, analytics, social media sharing buttons, and Gravatars.

Loading resources from third-party servers reduces page speed and can trigger PageSpeed Insights warnings. The most likely problems will be with the Eliminate render-blocking resources, Ensure text remains visible during webfont load, and Serve static assets with an efficient cache policy audits.

A good solution is to make a local copy of the resource and serve it directly from our server. There are two types of resources we need to treat differently: files that are unlikely to change and those that might change regularly. In both cases we make a copy of the external file and store and serve it from our child theme, but in the latter case we also need code to update the copy regularly.
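For the second case (resources that change), here is a minimal sketch of the update step, assuming your host allows file_get_contents() to fetch remote URLs (the allow_url_fopen setting). `refresh_local_copy()` is a hypothetical helper: it re-downloads the file only when the local copy is missing or older than a maximum age, and it keeps the old copy if the download fails.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: keep a local copy of an external file fresh.
 * Downloads $source into $localPath if the copy is missing or older than
 * $maxAgeSeconds. Returns true if a new copy was written. A failed download
 * leaves the existing copy untouched so the site keeps working.
 */
function refresh_local_copy(string $source, string $localPath, int $maxAgeSeconds, ?int $now = null): bool
{
    $now ??= time();
    clearstatcache(true, $localPath);
    if (is_file($localPath) && $now - (int) filemtime($localPath) < $maxAgeSeconds) {
        return false; // copy is still fresh
    }

    $contents = @file_get_contents($source); // needs allow_url_fopen for http(s) URLs
    if ($contents === false || $contents === '') {
        return false; // keep whatever copy we already have
    }

    // Write to a temporary file first, then rename, so visitors never get a half-written file.
    $tmp = $localPath . '.tmp';
    if (file_put_contents($tmp, $contents) === false) {
        return false;
    }
    return rename($tmp, $localPath);
}

// Example (URL and path are hypothetical). In WordPress you might call this from a
// scheduled WP-Cron event rather than on every page load:
// refresh_local_copy( 'https://example.com/widget.js', get_stylesheet_directory() . '/js/widget.js', DAY_IN_SECONDS );
```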

Localize Google Fonts

My theme, like many others, uses a Google font for its default. Since a font is not something likely to change often (if ever), it is a good candidate for localization.

First, as with our unnecessary scripts (e.g., tab widget) above, we need to dequeue the external reference. In the remove_assets function from before, add the following line:

```php
wp_dequeue_style( 'theme-google-font-default' );
```

replacing theme-google-font-default with whatever handle your theme is using for its Google font. Now our code looks like:

```php
function remove_assets() {
	// --- dequeue tab widget since I don't use it
	wp_dequeue_script( 'theme_tab_widget' );
	wp_dequeue_style( 'theme_tab_widget' );

	// --- dequeue Contact Form 7 script on every page except contact page
	if ( ! is_page( 'contact' ) ) {
		wp_dequeue_script( 'contact-form-7' );
	}

	// --- dequeue default google font
	wp_dequeue_style( 'theme-google-font-default' );
}
add_action( 'wp_enqueue_scripts', 'remove_assets', 99999 );
```

Creating a local copy is a bit more complicated, but not so bad. First, you need to visit the Google URL called by your theme. For example, my theme uses the Oswald font and loads it from:

If you visit that site you will see some @font-face code including the following:

```css
/* latin-ext */
@font-face {
  font-family: 'Oswald';
  font-style: normal;
  font-weight: 400;
  src: url(https://fonts.gstatic.com/s/oswald/v31/TK3_WkUHHAIjg75cFRf3bXL8LICs1_FvsUhiZTaR.woff2) format('woff2');
  unicode-range: U+0100-024F, U+0259, U+1E00-1EFF, U+2020, U+20A0-20AB, U+20AD-20CF, U+2113, U+2C60-2C7F, U+A720-A7FF;
}
/* latin */
@font-face {
  font-family: 'Oswald';
  font-style: normal;
  font-weight: 400;
  src: url(https://fonts.gstatic.com/s/oswald/v31/TK3_WkUHHAIjg75cFRf3bXL8LICs1_FvsUZiZQ.woff2) format('woff2');
  unicode-range: U+0000-00FF, U+0131, U+0152-0153, U+02BB-02BC, U+02C6, U+02DA, U+02DC, U+2000-206F, U+2074, U+20AC, U+2122, U+2191, U+2193, U+2212, U+2215, U+FEFF, U+FFFD;
}
```

We want to add this code to our child theme stylesheet. But, first notice that two different .woff2 files are referenced in that code. Since these are also on external servers we will get warnings for them if we leave the code as is. So, simply visit each of these URLs and download the respective files to your child theme folder. I put mine in a folder I call “Oswald,” which is inside the “fonts” folder.

Now, edit the above code to be like this:

/* --- Theme's default Google font -- */
/* latin-ext */
@font-face {
  font-family: 'Oswald';
  font-style: normal;
  font-weight: 400;
  src: url(fonts/Oswald/TK3_WkUHHAIjg75cFRf3bXL8LICs1_FvsUhiZTaR.woff2) format('woff2');
  unicode-range: U+0100-024F, U+0259, U+1E00-1EFF, U+2020, U+20A0-20AB, U+20AD-20CF, U+2113, U+2C60-2C7F, U+A720-A7FF;
}
/* latin */
@font-face {
  font-family: 'Oswald';
  font-style: normal;
  font-weight: 400;
  src: url(fonts/Oswald/TK3_WkUHHAIjg75cFRf3bXL8LICs1_FvsUZiZQ.woff2) format('woff2');
  unicode-range: U+0000-00FF, U+0131, U+0152-0153, U+02BB-02BC, U+02C6, U+02DA, U+02DC, U+2000-206F, U+2074, U+20AC, U+2122, U+2191, U+2193, U+2212, U+2215, U+FEFF, U+FFFD;
}

That’s it. Of course, if you put the files somewhere other than fonts/Oswald in your child theme folder, you will need to change the src paths in the above code.
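Rewriting the src URLs by hand is fine for two files, but a font with several weights or subsets quickly turns into a lot of copy-and-paste. As a rough sketch, the hypothetical PHP helper below (localize_google_font_css() is an illustrative name, not a WordPress or Google function) takes the CSS you copied from Google, points each fonts.gstatic.com URL at a local folder such as fonts/Oswald, and returns the list of files you still need to download. It assumes unquoted url(...) values like the ones in the Oswald sample. Google Fonts tailors the CSS it returns to the requesting browser, so copy it from a modern browser to get .woff2 URLs.

```php
<?php
/**
 * Hypothetical helper: point every fonts.gstatic.com URL in Google Fonts CSS
 * at a local folder, and report which files still need downloading.
 *
 * Returns [rewritten CSS, array of remote URL => local relative path].
 * Assumes unquoted url(...) values, as in the Oswald sample above.
 */
function localize_google_font_css(string $css, string $local_dir): array {
    $downloads = [];
    $rewritten = preg_replace_callback(
        '#url\((https://fonts\.gstatic\.com/[^)\s]+)\)#',
        function (array $m) use ($local_dir, &$downloads): string {
            $remote = $m[1];
            // Keep Google's file name, e.g. TK3_WkUHHAIjg75cFRf3bXL8LICs1_FvsUZiZQ.woff2
            $local = rtrim($local_dir, '/') . '/' . basename(parse_url($remote, PHP_URL_PATH));
            $downloads[$remote] = $local;
            return 'url(' . $local . ')';
        },
        $css
    );
    return [$rewritten, $downloads];
}

// Example with the "latin" src line from the Oswald CSS:
[$css, $files] = localize_google_font_css(
    "src: url(https://fonts.gstatic.com/s/oswald/v31/TK3_WkUHHAIjg75cFRf3bXL8LICs1_FvsUZiZQ.woff2) format('woff2');",
    'fonts/Oswald'
);
echo $css, "\n";
// src: url(fonts/Oswald/TK3_WkUHHAIjg75cFRf3bXL8LICs1_FvsUZiZQ.woff2) format('woff2');
```

You would still download each remote URL in the returned map to the matching path inside your child theme folder.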

Localize Font Awesome

Font Awesome is a popular tool for theme designers. The problem is that it is usually referenced from the original external source and thus will produce a warning like our Google font did.

The solution is to load it from your child theme, as we did with the Google font. But Font Awesome is more than a single font file (it ships with its own CSS plus several font files), so you will need to download it as a .zip file:

https://fortawesome.github.io/Font-Awesome/

Simply unzip it to your child theme (like the Google font, I put it in my fonts folder) and then enqueue it as follows:

wp_enqueue_style( 'font-awesome', get_stylesheet_directory_uri() . '/fonts/font-awesome-4.7.0/css/font-awesome.min.css' );

Of course, as with our Google font, we first need to dequeue and deregister the previously enqueued instance. But here is where it gets interesting. Because Font Awesome is so popular, your theme and one or more plugins might all load it. In my case, three plugins enqueue Font Awesome. Two use the ‘font-awesome’ handle, so dequeuing that handle takes care of both. A third plugin, however, enqueues it with a handle called ssbp-font-awesome, so I had to dequeue that one separately.

Here is what my code looks like:

// --- dequeue font-awesome loaded by Simple Share Buttons Adder plugin
wp_dequeue_style( 'ssbp-font-awesome' );
wp_deregister_style( 'ssbp-font-awesome' );

// --- dequeue font-awesome
wp_dequeue_style( 'font-awesome' );
wp_deregister_style( 'font-awesome' );

// --- enqueue local copy of font-awesome
wp_enqueue_style( 'font-awesome', get_stylesheet_directory_uri() . '/assets/fonts/font-awesome-4.7.0/css/font-awesome.min.css' );

I just add that to the remove_assets function from before.

That takes care of loading Font Awesome locally, but there is more you can do to optimize it. The easiest improvement is to stop referencing all the different webfont formats.

If you look at the font-awesome.css and font-awesome.min.css files, you will see that the @font-face CSS references .eot, .woff, .woff2, .ttf, and .svg files. Why? In an answer to a question on StackExchange, Rich Bradshaw offers a good overview of these file types. His advice is that you only need one, preferably .woff2. So, simply edit the font-awesome.min.css file and remove the url(...) references to every format except .woff2.
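If you would rather not hand-edit font-awesome.min.css after every Font Awesome update, the edit can be scripted. The sketch below is a hypothetical keep_only_woff2() helper, not something Font Awesome provides. It finds each @font-face block, removes every src declaration (Font Awesome 4.7 has two, one of them only for old Internet Explorer), and puts back a single src pointing at the .woff2 file. It assumes the blocks contain no nested braces and leaves any block without a .woff2 source untouched. Dropping the other formats means browsers without .woff2 support get no icon font, which is the trade-off implied by keeping only .woff2.

```php
<?php
/**
 * Hypothetical helper: keep only the .woff2 source in each @font-face block.
 * Assumes no nested braces inside @font-face (true for font-awesome.min.css).
 */
function keep_only_woff2(string $css): string {
    return preg_replace_callback('/@font-face\s*\{[^}]*\}/', function (array $m): string {
        $block = $m[0];

        // Find the woff2 entry, e.g. url('../fonts/x.woff2?v=4.7.0') format('woff2')
        if (!preg_match("/url\([^)]*\)\s*format\(['\"]?woff2['\"]?\)/", $block, $woff2)) {
            return $block; // no .woff2 source: leave this block alone
        }

        // Remove every src declaration (Font Awesome 4.7 has two).
        $block = preg_replace('/src\s*:[^;}]*;?/', '', $block);

        // Re-insert a single src right after the opening brace.
        $pos = strpos($block, '{');
        return substr($block, 0, $pos + 1) . 'src:' . $woff2[0] . ';' . substr($block, $pos + 1);
    }, $css);
}
```

You could run it over your local font-awesome.min.css with file_get_contents() and file_put_contents() after each Font Awesome update.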

Sharath at WebJeda offers a couple of ways to get even more Font Awesome size savings. I hesitate to use them because they involve deleting icons my theme doesn’t need, and a plugin might still rely on some of those icons. But, if you know no plugins rely on them, give it a try.

For more font optimizations, see the discussion of font-display: swap and preloading in the Font Loading section below.

Localize Minified JavaScript Files

A couple of JavaScript files—one from my theme and one from a plugin—are not minified. That’s inefficient and will cause a PageSpeed Insights warning.

Although it sounds a bit odd, my solution is to localize these local scripts; that is, to keep a minified copy in my child theme (in its assets/js/ directory). I could just minify the originals, but the next theme or plugin update would overwrite them. Again, I use the remove_assets function.

// --- dequeue placeholders.js loaded by theme and load my own minified copy
wp_dequeue_script( 'theme-placeholders' );
wp_deregister_script( 'theme-placeholders' );
wp_enqueue_script( 'theme-placeholders', get_stylesheet_directory_uri() . '/assets/js/placeholders.min.js' );

// --- dequeue jquery.fancybox-1.3.4.js loaded by Responsive Lightbox plugin and load my own minified copy
// --- (fancybox is a jQuery plugin, so declare jQuery as a dependency)
wp_dequeue_script( 'responsive-lightbox-fancybox' );
wp_deregister_script( 'responsive-lightbox-fancybox' );
wp_enqueue_script( 'responsive-lightbox-fancybox', get_stylesheet_directory_uri() . '/assets/js/jquery.fancybox-1.3.4.min.js', array( 'jquery' ) );

So, now my function looks like:

function remove_assets() {
    // --- dequeue tab widget since I don't use it
    wp_dequeue_script( 'theme_tab_widget' );
    wp_dequeue_style( 'theme_tab_widget' );

    // --- dequeue Contact Form 7 script on every page except contact page
    if ( !is_page( "contact" ) ) {
        wp_dequeue_script( 'contact-form-7' );
    }

    // --- dequeue default google font
    wp_dequeue_style( 'theme-google-font-default' );

    // --- dequeue font-awesome loaded by Simple Share Buttons Adder plugin
    wp_dequeue_style( 'ssbp-font-awesome' );
    wp_deregister_style( 'ssbp-font-awesome' );

    // --- dequeue font-awesome
    wp_dequeue_style( 'font-awesome' );
    wp_deregister_style( 'font-awesome' );

    // --- enqueue local copy of font-awesome
    wp_enqueue_style( 'font-awesome', get_stylesheet_directory_uri() . '/assets/fonts/font-awesome-4.7.0/css/font-awesome.min.css' );

    // --- dequeue placeholders.js loaded by theme and load my own minified copy
    wp_dequeue_script( 'theme-placeholders' );
    wp_deregister_script( 'theme-placeholders' );
    wp_enqueue_script( 'theme-placeholders', get_stylesheet_directory_uri() . '/assets/js/placeholders.min.js' );

    // --- dequeue jquery.fancybox-1.3.4.js loaded by Responsive Lightbox plugin and load my own minified copy
    // --- (fancybox is a jQuery plugin, so declare jQuery as a dependency)
    wp_dequeue_script( 'responsive-lightbox-fancybox' );
    wp_deregister_script( 'responsive-lightbox-fancybox' );
    wp_enqueue_script( 'responsive-lightbox-fancybox', get_stylesheet_directory_uri() . '/assets/js/jquery.fancybox-1.3.4.min.js', array( 'jquery' ) );
}
add_action( 'wp_enqueue_scripts', 'remove_assets', 99999 );
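Because the same dequeue, deregister, and enqueue triplet repeats for every swapped file, one option is to drive it from an array. The sketch below shows that idea with a hypothetical swap_in_minified_scripts() helper. The stub functions at the top exist only so it can run outside WordPress (inside WordPress the real functions already exist and the stubs are skipped), and the example.com URL is a placeholder. Passing array( 'jquery' ) as the fancybox dependency is an assumption based on fancybox being a jQuery plugin.

```php
<?php
// Minimal stand-ins so this sketch runs outside WordPress. They only record
// what would have happened; inside WordPress the real functions already exist,
// so the function_exists() check skips these definitions.
$GLOBALS['asset_calls'] = [];
if (!function_exists('wp_dequeue_script')) {
    function wp_dequeue_script($handle) {
        $GLOBALS['asset_calls'][] = "dequeue:$handle";
    }
    function wp_deregister_script($handle) {
        $GLOBALS['asset_calls'][] = "deregister:$handle";
    }
    function wp_enqueue_script($handle, $src = '', $deps = array()) {
        $GLOBALS['asset_calls'][] = "enqueue:$handle:$src:" . implode(',', $deps);
    }
    function get_stylesheet_directory_uri() {
        return 'https://example.com/wp-content/themes/child'; // placeholder URL
    }
}

/**
 * Hypothetical helper: swap each registered script for a local minified copy.
 * $swaps maps a script handle to [path under the child theme, dependencies].
 */
function swap_in_minified_scripts(array $swaps): void {
    $base = get_stylesheet_directory_uri();
    foreach ($swaps as $handle => [$path, $deps]) {
        wp_dequeue_script($handle);
        wp_deregister_script($handle);
        wp_enqueue_script($handle, $base . $path, $deps);
    }
}

// Example: the two swaps from remove_assets(). In WordPress, make this call
// from inside remove_assets() rather than at the top level of functions.php.
swap_in_minified_scripts(array(
    'theme-placeholders'           => array('/assets/js/placeholders.min.js', array()),
    'responsive-lightbox-fancybox' => array('/assets/js/jquery.fancybox-1.3.4.min.js', array('jquery')),
));
```

Adding another file to localize then means adding one array entry rather than three more lines.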

Localize Google Analytics

Google Analytics is another external file that triggers PageSpeed Insights warnings (Google serves it with a short cache lifetime), so it is worth localizing. Unfortunately, unlike fonts, the GA file is updated fairly often, so a one-time manual download isn’t enough; we would surely forget to refresh it. Instead, we need a way to download new copies automatically on a schedule.

As with Google fonts, when I first wrote this guide there was no plugin for this, so I wrote my own code and run it via a cron job twice a week. I list that code below, without explanation, for anyone interested. There is now a good GA localization plugin called Complete Analytics Optimization Suite (CAOS). My own code has worked well for 5+ years, so I haven’t tried CAOS, but it looks excellent.

// -------------------------------------------------------------------------------
// --- Localize external scripts to avoid Google PageSpeed Insights warnings
// --- Note: I am localizing two different GA files and the Google AdSense file
// -------------------------------------------------------------------------------
function localize_external_scripts() {
    // --- array of all the external files we want to localize (minify flag, orig url, filename, handle)
    $external_files = array();
    $external_files[] = array( "nominify", "https://www.google-analytics.com/analytics.js", "analytics.js", "" );
    $external_files[] = array( "nominify", "https://www.googletagmanager.com/gtag/js?id=" . $GLOBALS['ua_id'], "gtag.js", "" );
    $external_files[] = array( "nominify", "https://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js", "adsbygoogle.js", "" );

    $body = "";
    $i = 0;
    foreach ( $external_files as $external_file ) {
        $i++;
        $url      = $external_file[1];
        $filename = get_stylesheet_directory() . "/js-external/" . $external_file[2];
        $body    .= "<strong>Filename</strong>: " . $external_file[2] . "<br />";

        // --- Copy the remote file to the local file
        clearstatcache();
        $src = fopen( $url, 'r' );
        if ( $src === FALSE ) {
            $body .= "File does not exist - <strong>PLEASE INVESTIGATE</strong><br />";
            continue;
        }
        $dest = fopen( $filename, 'w' );
        if ( $dest === FALSE ) {
            fclose( $src );
            $body .= " ... <strong>WARNING</strong>: couldn't open the local file for writing<br />";
            continue;
        }
        $bytescopied = stream_copy_to_stream( $src, $dest );
        fclose( $src );
        fclose( $dest );
        $body .= " ... " . $bytescopied . " bytes copied.<br />";

        // --- replace references to Google's server with my localized copy of analytics.js
        // --- (the files live in the child theme, so use the stylesheet directory URI)
        $local_ga    = get_stylesheet_directory_uri() . "/js-external/analytics.js";
        $filecontent = file_get_contents( $filename );
        $filecontent = str_replace( "https://www.google-analytics.com/analytics.js", $local_ga, $filecontent );
        $filecontent = str_replace( "//www.google-analytics.com/analytics.js", $local_ga, $filecontent );
        file_put_contents( $filename, $filecontent );

        // --- Just to be safe, check that file has content
        $contents = file_get_contents( $filename );
        if ( $contents === FALSE ) {
            $body .= " ... <strong>WARNING</strong>: couldn't read this file ... check to make sure everything is OK<br />";
        } elseif ( strpos( $contents, "function" ) === FALSE ) {
            $body .= " ... <strong>WARNING</strong>: this file has no <code>function</code> ... check to make sure everything is OK<br />";
        } else {
            $body .= " ... This file has a <code>function</code> ... everything seems OK<br />";
        }
        if ( $i < count( $external_files ) ) $body .= "<br />";
    }
    return $body;
}

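The function above writes each download directly over the existing local file, so an interrupted or failed transfer can leave a broken script in place until the next cron run. One way to guard against that, sketched below with a hypothetical refresh_local_copy() helper (not part of the author's code or of CAOS), is to validate the download first and then swap it in with rename(), which replaces the old file in a single step on typical POSIX filesystems. Fetching an http(s) URL this way assumes allow_url_fopen is enabled, as the original fopen() call does.

```php
<?php
/**
 * Hypothetical helper: refresh a local copy of a remote script, but only
 * replace the existing file when the download looks valid.
 */
function refresh_local_copy(string $url, string $dest, string $must_contain = 'function'): bool {
    // @ silences the warning; a failed fetch is reported through the return value.
    $content = @file_get_contents($url);
    if ($content === false || $content === '' || strpos($content, $must_contain) === false) {
        return false; // keep whatever copy is already on disk
    }

    // Write to a temporary file first, then rename it over the old copy,
    // so the site never serves a half-written script.
    $tmp = $dest . '.tmp';
    if (file_put_contents($tmp, $content) === false) {
        return false;
    }
    return rename($tmp, $dest);
}
```

The same "function" sanity check the original uses decides whether a download is kept, so a bad fetch simply leaves yesterday's copy in place.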
Daan van den Bergh, the developer of the CAOS plugin, wrote a useful article exploring the differences between analytics.js, gtag.js, and ga.js. The quick summary is that ga.js is old, so don’t bother using it. It was replaced by analytics.js, which mostly just does analytics and so is probably your best bet for page speed optimization. analytics.js is still active, but gtag.js is what Google now recommends. If you want to integrate Google services besides Google Analytics, or you want advanced campaign or usage tracking, gtag.js is what you want. Otherwise, stick with analytics.js.

If you decide to switch to the new gtag GA tracking code, there is one big page speed issue to be aware of. The Remarketing and Advertising Reporting Features provide demographic information on your site’s visitors. These features are not enabled by default, but if you enable them (in the Tracking Code -> Data Collection section of GA), you will get a Minimize Redirects warning from Pingdom.

As far as I can tell, there is no way to keep this feature and eliminate the warning. So, your choices are: accept a redirect chain penalty or stop hosting the GA script locally and accept a Leverage Browser Caching error.

Breaking Down PageSpeed Insights Tests

Now let’s turn our attention to code modifications you can make to bump up your PageSpeed Insights score. To do so, let’s look at what PSI (Lighthouse) measures and discuss solutions for each audit.

In addition to presenting your overall performance score, the PageSpeed Insights results page lists the Lighthouse performance audits in four sections: Lab Data (Metrics), Opportunities, Diagnostics, and Passed Audits.

PageSpeed Insights Lab Data (Metrics)

Lab data presents the Lighthouse test results used to calculate your overall performance score. Let’s take a look at these tests.

In late May 2020, PageSpeed Insights switched from Lighthouse version 5 to version 6, making some significant changes. Most notably, it replaced the First Meaningful Paint (FMP), First CPU Idle, and Max Potential First Input Delay (Max FID) audits with the Largest Contentful Paint (LCP), Total Blocking Time (TBT), and Cumulative Layout Shift (CLS) audits. The weighting changed as well:

| v5 Test | v5 Weight | v6 Test | v6 Weight |
|---|---|---|---|
| FCP (First Contentful Paint) | 20% | FCP (First Contentful Paint) | 15% |
| SI (Speed Index) | 26.7% | SI (Speed Index) | 15% |
| TTI (Time to Interactive) | 33.3% | TTI (Time to Interactive) | 15% |
| FMP (First Meaningful Paint) | 6.7% | LCP (Largest Contentful Paint) | 25% |
| FCI (First CPU Idle) | 13.3% | TBT (Total Blocking Time) | 25% |
| | | CLS (Cumulative Layout Shift) | 5% |
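The percentages in the table are normalized integer weights: v5 uses 3 (FCP), 4 (SI), 5 (TTI), 1 (FMP), and 2 (FCI) out of 15, which gives 20%, 26.7%, 33.3%, 6.7%, and 13.3%, while v6 uses whole percentages. The overall performance score is a weighted average of the per-metric scores, which the sketch below computes. It is a simplification: Lighthouse first converts each raw timing into a 0–100 metric score using per-metric log-normal curves, a step this sketch does not model, and LIGHTHOUSE_WEIGHTS and performance_score() are illustrative names only.

```php
<?php
// Integer weights taken from the table above. v5 weights are out of 15
// (3/15 = 20%, 4/15 = 26.7%, ...); v6 weights are plain percentages.
const LIGHTHOUSE_WEIGHTS = [
    'v5' => ['FCP' => 3, 'SI' => 4, 'TTI' => 5, 'FMP' => 1, 'FCI' => 2],
    'v6' => ['FCP' => 15, 'SI' => 15, 'TTI' => 15, 'LCP' => 25, 'TBT' => 25, 'CLS' => 5],
];

/**
 * Weighted average of per-metric scores (each 0–100).
 * Simplification: Lighthouse first turns each raw timing into such a score
 * using a per-metric log-normal curve; that step is not modelled here.
 */
function performance_score(array $metric_scores, string $version): float {
    if (!array_key_exists($version, LIGHTHOUSE_WEIGHTS)) {
        throw new InvalidArgumentException("Unknown Lighthouse version: $version");
    }
    $weights = LIGHTHOUSE_WEIGHTS[$version];
    $total = 0;
    foreach ($weights as $metric => $weight) {
        if (!array_key_exists($metric, $metric_scores)) {
            throw new InvalidArgumentException("Missing score for $metric");
        }
        $total += $weight * $metric_scores[$metric];
    }
    return $total / array_sum($weights);
}

// Example: mediocre LCP and TBT pull a v6 score well below the FCP score.
echo performance_score(
    ['FCP' => 90, 'SI' => 80, 'LCP' => 70, 'TTI' => 60, 'TBT' => 50, 'CLS' => 100],
    'v6'
), "\n"; // 69.5
```

Because LCP and TBT together carry half the v6 weight, improving those two metrics usually moves the overall score more than shaving time off FCP.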

First Contentful Paint (FCP)

The PageSpeed Insights (Lighthouse) First Contentful Paint metric, displayed in seconds, measures how long it takes the browser to render the first piece of DOM content after a user navigates to your page. Images, non-white <canvas> elements, and SVGs on your page are considered DOM content; anything inside an iframe isn’t included.

Your FCP score is a comparison of your page’s FCP time and FCP times for real websites, based on data from the HTTP Archive. For example, sites performing in the ninety-ninth percentile render FCP in about 1.5 seconds. If your website’s FCP is 1.5 seconds, your FCP score is 99.

This table shows how to interpret your FCP score:

| FCP time (in seconds) | Color-coding | FCP score (HTTP Archive percentile) |
|---|---|---|
| 0–2 | Green (fast) | 75–100 |
| 2–4 | Orange (moderate) | 50–74 |
| Over 4 | Red (slow) | 0–49 |

Font Loading

One issue that’s particularly important for FCP is font load time. Some browsers hide text until the font loads, causing a flash of invisible text (FOIT). There are two ways to avoid this: one is very simple but does not have universal browser support; the second is more complicated but has full browser support.

Option #1: Use font-display

The simple solution is to temporarily show a system font by including font-display: swap in your @font-face style.

@font-face {
  font-family: 'Pacifico';
  font-style: normal;
  font-weight: 400;
  src: local('Pacifico Regular'), local('Pacifico-Regular'), url(https://fonts.gstatic.com/s/pacifico/v12/FwZY7-Qmy14u9lezJ-6H6MmBp0u-.woff2) format('woff2');
  font-display: swap;
}
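If you have already copied Google’s @font-face rules into your child theme stylesheet (as with the Oswald example earlier), you can add font-display: swap to each rule by hand. For a stylesheet with many rules, a small PHP sketch like the one below could do it instead. add_font_display_swap() is a hypothetical helper, and its regex assumes simple @font-face blocks with no nested braces, which holds for the Google Fonts CSS shown in this guide.

```php
<?php
/**
 * Hypothetical helper: add "font-display: swap" to every @font-face block
 * that doesn't already declare font-display. Assumes no nested braces.
 */
function add_font_display_swap(string $css): string {
    return preg_replace_callback('/@font-face\s*\{([^}]*)\}/', function (array $m): string {
        if (stripos($m[1], 'font-display') !== false) {
            return $m[0]; // already set: leave the block unchanged
        }
        $body = rtrim($m[1]);
        // Make sure the last declaration ends with ";" before appending ours.
        if ($body !== '' && substr($body, -1) !== ';') {
            $body .= ';';
        }
        return '@font-face {' . $body . ' font-display: swap; }';
    }, $css);
}
```

Because blocks that already declare font-display are skipped, running it twice over the same stylesheet leaves the result unchanged.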